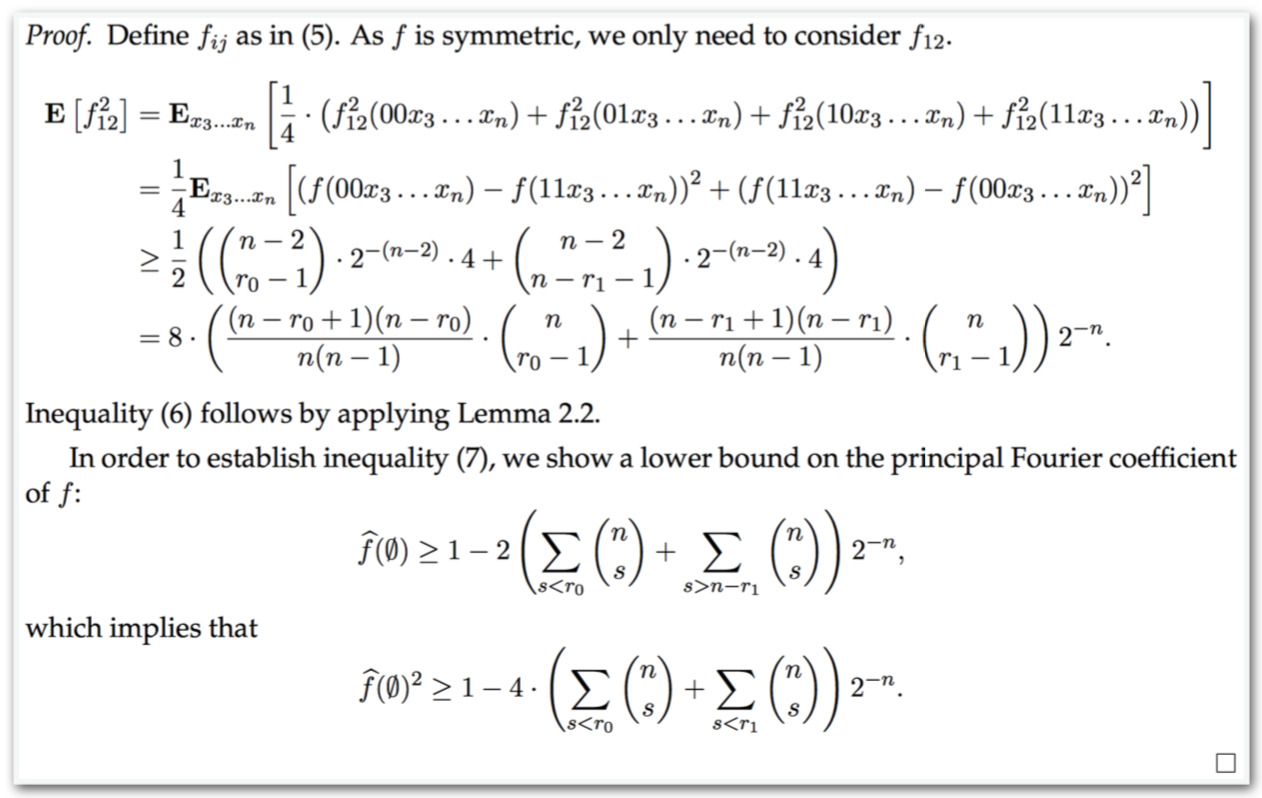

Show that \(2^n > n\) for all integers \(n \geq 1\).

(Line numbers are added for easy referencing.)

\(F_n =\) “\(2^n > n\)”

\(F_1 =\) “\(2 > 1\)” \(\checkmark\)

\(F_n \implies F_{n+1}:\)

\(2^{n+1} = 2 \cdot 2^n > 2\cdot n \text{ (induction) } \geq n+1\) because \(n \geq 1\)

Therefore proved.

Is the basic structure right?

Are you starting the right way?

Did you read your proof out loud?

Is the purpose of every sentence clear?

Are you giving the right level of detail?

Should you break up your proof?

Does your proof type-check?

Are your references clear?

Where are you using the assumptions?

Is the proof idea clear?

We will prove that for all integers \(n \geq 1\), \(2^n > n\). The proof is by induction on \(n\).

Let’s start with the base case, which corresponds to \(n=1\). In this case, the inequality \(2^n > n\) translates to \(2^1 > 1\), which is indeed true.

To carry out the induction step, we want to argue that for all \(n \geq 1\), \(2^n > n\) implies \(2^{n+1} > n+1\). We do so now. For an arbitrary \(n \geq 1\), assume \(2^n > n\). Multiplying both sides of the inequality by \(2\), we get \(2^{n+1} > 2n\). Note that since we are assuming \(n \geq 1\), we have \(2n = n+n \geq n+1\). Therefore, we can conclude that \(2^{n+1} > n + 1\), as desired.
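A quick numerical sanity check can complement (but never replace) the induction proof. The sketch below checks the base case and each link of the chain \(2^{n+1} = 2 \cdot 2^n > 2n \geq n+1\) for the first few values of \(n\); the function name `check_step` and the cutoff of 200 are arbitrary choices made for illustration.

```python
# Sanity check, not a proof: test the base case and the chain of
# inequalities used in the induction step for small values of n.

def check_step(n: int) -> bool:
    """Check 2^(n+1) = 2 * 2^n > 2n >= n + 1 for one value of n >= 1."""
    hypothesis = 2**n > n                   # the induction hypothesis
    doubling = 2**(n + 1) == 2 * 2**n       # rewrite 2^(n+1)
    multiply = 2 * 2**n > 2 * n             # multiply the hypothesis by 2
    use_n_ge_1 = 2 * n >= n + 1             # this is where n >= 1 is used
    return hypothesis and doubling and multiply and use_n_ge_1


base_case_holds = 2**1 > 1
all_steps_hold = all(check_step(n) for n in range(1, 200))
print(base_case_holds, all_steps_hold)
```

Only finitely many cases are tested here; the induction argument is what covers every \(n \geq 1\).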

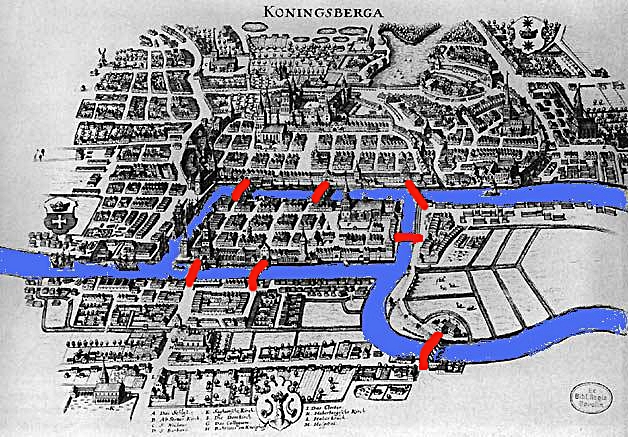

If you line up any number of dominoes in a row, and knock the first one over, then all the dominoes will fall.

If you line up an infinite row of dominoes, one domino for each natural number, and you knock the first one over, then all the dominoes will fall.

Every natural number greater than \(1\) can be factored into primes.

Set up a proof by contradiction.

Let \(m\) be the minimum number such that \(S_m\) is not true.

Show that \(S_k\) is not true for some \(k < m\), which contradicts the minimality of \(m\).

At any party, at any point in time, define a person’s parity as odd or even according to the number of hands they have shaken. Then the number of people with odd parity must be even.

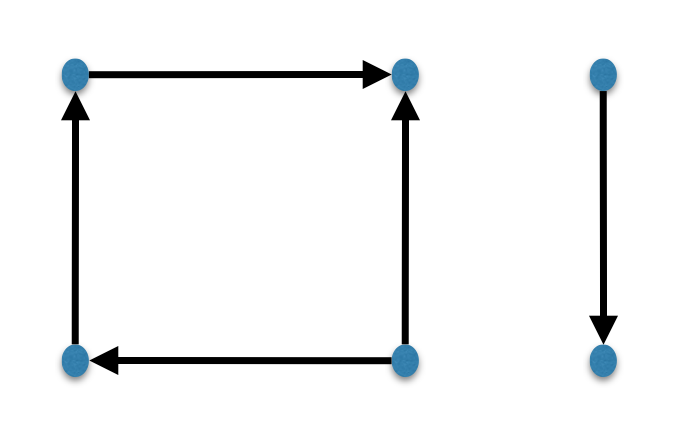

We have a time-varying world state: \(W_0, W_1, W_2, \ldots\).

The goal is to prove that some statement \(S\) is true for all world states.

Argue:

Statement \(S\) is true for \(W_0\).

If \(S\) is true for \(W_k\), then it remains true for \(W_{k+1}\).

Fix some finite set of symbols \(\Sigma\). We define a string over \(\Sigma\) recursively as follows.

Base case: The empty sequence, denoted \(\epsilon\), is a string.

Recursive rule: If \(x\) is a string and \(a \in \Sigma\), then \(ax\) is a string.

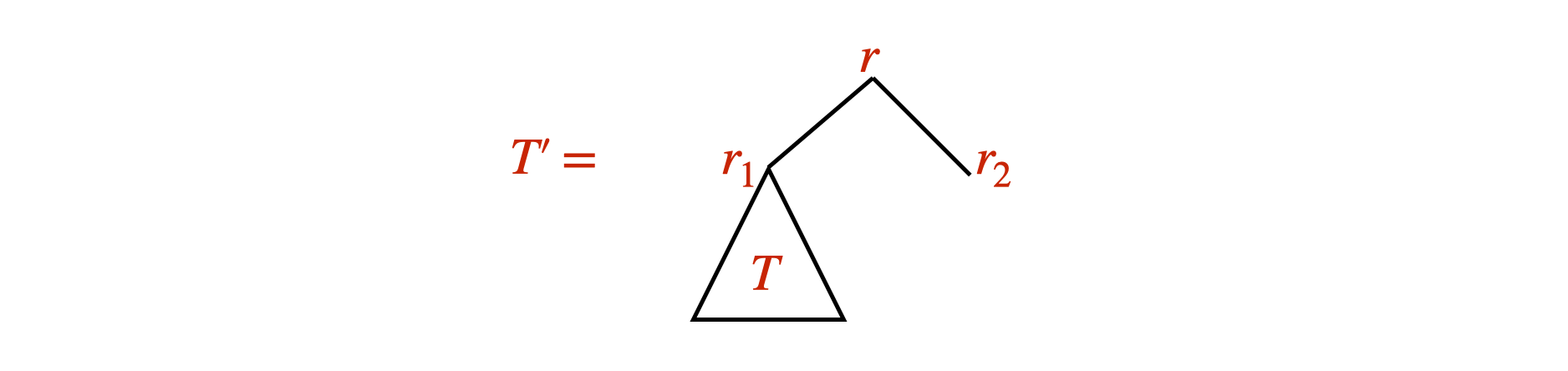

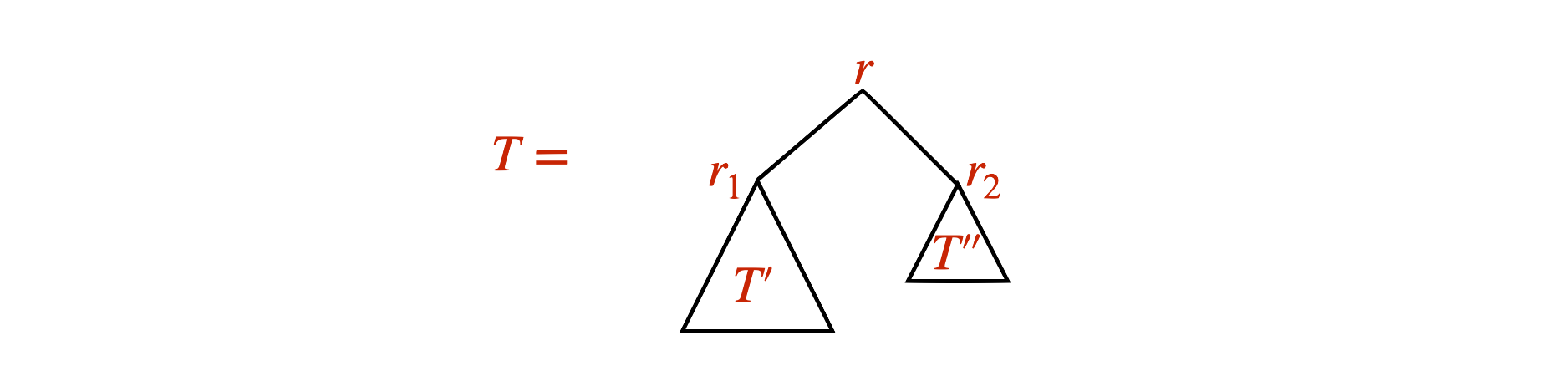

We define a binary tree recursively as follows.

Base case: A single node \(r\) is a binary tree with root \(r\).

Recursive rule: If \(T'\) and \(T''\) are binary trees with roots \(r_1\) and \(r_2\) respectively, then \(T\), which has a node \(r\) adjacent to \(r_1\) and \(r_2\), is a binary tree with root \(r\).

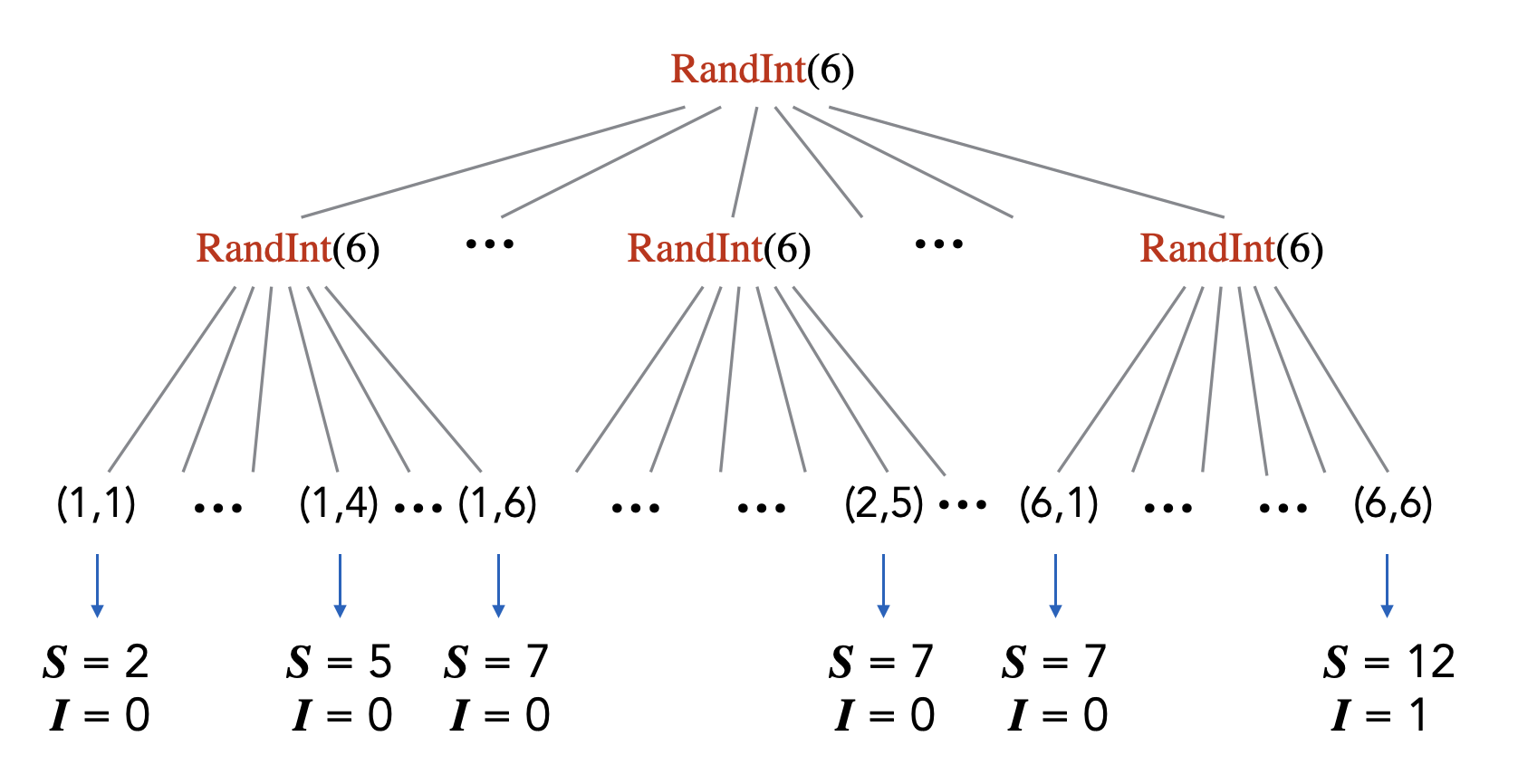

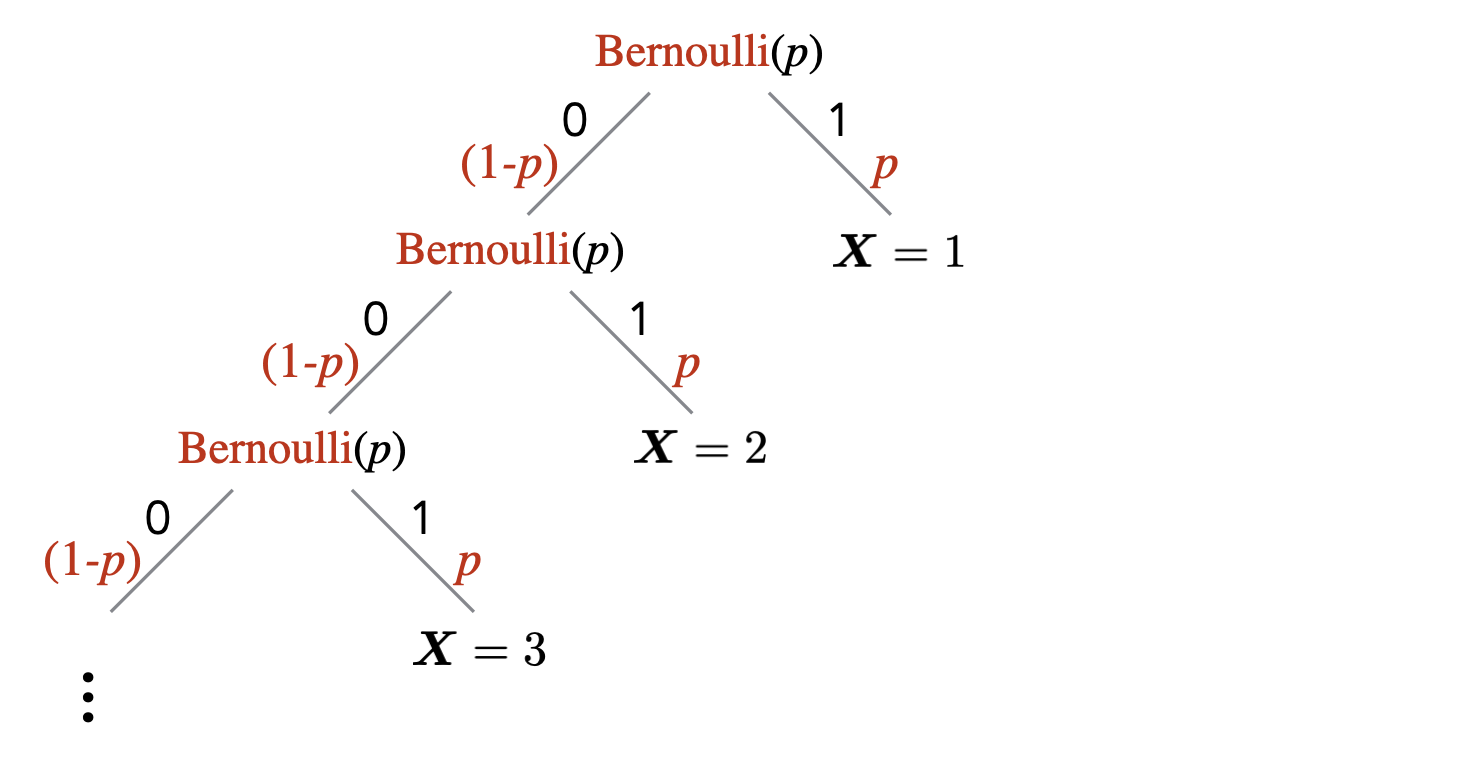

Let \(T\) be a binary tree. Let \(L_T\) be the number of leaves in \(T\) and let \(I_T\) be the number of internal nodes in \(T\). Then \(L_T = I_T + 1\).
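The claim \(L_T = I_T + 1\) can be tested on trees built exactly as the recursive definition prescribes. The following is a minimal sketch under that definition; the names `Tree`, `join`, `count_leaves`, `count_internal` and `random_tree` are hypothetical helpers introduced here, and the test only samples a handful of trees.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tree:
    """A node with no children is the base case; a node with two
    children results from applying the recursive rule."""
    left: Optional["Tree"] = None
    right: Optional["Tree"] = None


def join(t1: Tree, t2: Tree) -> Tree:
    """Recursive rule: a new root adjacent to the roots of t1 and t2."""
    return Tree(t1, t2)


def count_leaves(t: Tree) -> int:
    if t.left is None:                      # single node: it is a leaf
        return 1
    return count_leaves(t.left) + count_leaves(t.right)


def count_internal(t: Tree) -> int:
    if t.left is None:                      # single node: no internal nodes
        return 0
    return 1 + count_internal(t.left) + count_internal(t.right)


def random_tree(rng: random.Random, steps: int) -> Tree:
    """Apply the recursive rule `steps` times to previously built trees.

    Subtrees may be reused as Python objects; the counting functions
    treat each use as a separate copy, which matches the definition.
    """
    pool = [Tree()]
    for _ in range(steps):
        pool.append(join(rng.choice(pool), rng.choice(pool)))
    return pool[-1]


rng = random.Random(0)
trees = [random_tree(rng, steps) for steps in range(10)]
identity_holds = all(count_leaves(t) == count_internal(t) + 1 for t in trees)
print(identity_holds)
```

The two counting functions recurse in the same way the definition builds a tree, which mirrors the shape a structural induction proof of the claim would take.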

You have a recursively defined set of objects, and you want to prove a statement \(S\) about those objects.

Check that \(S\) is true for the base case(s) of the recursive definition.

Prove \(S\) holds for “new” objects created by the recursive rule, assuming it holds for “old” objects used in the recursive rule.

We prove statement \(S\) by induction on the height of a tree. Let’s first check the base case... and it is true.

For the induction step, take an arbitrary binary tree \(T\) of height \(h\). Let \(T'\) be the following tree of height \(h+1\):

[Some argument showing why \(S\) is true for \(T'\)] Therefore we are done.

Let \(L\) be a set of binary strings (i.e. strings over \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\)) defined recursively as follows:

(base case) the empty string, \(\epsilon\), is in \(L\);

(recursive rule) if \(x, y \in L\), then \({\texttt{0}}x{\texttt{1}}y{\texttt{0}} \in L\).

This means that every string in \(L\) is derived starting from the base case, and applying the recursive rule a finite number of times. Show that for any string \(w \in L\), the number of \({\texttt{0}}\)’s in \(w\) is exactly twice the number of \({\texttt{1}}\)’s in \(w\).
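Before attempting the proof, it can help to generate a few members of \(L\) and check the claimed property on them. The sketch below applies the recursive rule for a fixed number of rounds; the function name `generate` and the number of rounds are illustrative assumptions, and passing the check is evidence rather than a proof.

```python
def generate(rounds: int) -> set:
    """Strings of L obtainable with at most `rounds` nested rule applications."""
    lang = {""}                                  # base case: the empty string
    for _ in range(rounds):
        # Recursive rule: if x and y are in L, then 0x1y0 is in L.
        lang |= {"0" + x + "1" + y + "0" for x in lang for y in lang}
    return lang


sample = generate(3)
property_holds = all(w.count("0") == 2 * w.count("1") for w in sample)
print(sorted(sample, key=len)[:5], property_holds)
```

The number of generated strings grows very quickly with `rounds`, so small values are advisable.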

In an induction argument on recursively defined objects, if you say that you will use structural induction, then the assumption is that the parameter being inducted on is the minimum number of applications of the recursive rules needed to create an object. And in this case, explicitly stating the parameter being inducted on, or the induction hypothesis, is not needed.

An alphabet is a non-empty, finite set, and is usually denoted by \(\Sigma\). The elements of \(\Sigma\) are called symbols or characters.

Given an alphabet \(\Sigma\), a string (or word) over \(\Sigma\) is a (possibly infinite) sequence of symbols, written as \(a_1a_2a_3\ldots\), where each \(a_i \in \Sigma\). The string with no symbols is called the empty string and is denoted by \(\epsilon\).

In our notation of a string, we do not use quotation marks. For instance, we write \({\texttt{1010}}\) rather than “\({\texttt{1010}}\)”, even though the latter notation using the quotation marks is the standard one in many programming languages. Occasionally, however, we may use quotation marks to distinguish a string like “\({\texttt{1010}}\)” from another type of object with the representation \(1010\) (e.g. the binary number \(1010\)).

The length of a string \(w\), denoted \(|w|\), is the number of symbols in \(w\). If \(w\) has an infinite number of symbols, then the length is undefined.

Let \(\Sigma\) be an alphabet. We denote by \(\Sigma^*\) the set of all strings over \(\Sigma\) consisting of finitely many symbols. Equivalently, using set notation, \[\Sigma^* = \{a_1a_2\ldots a_n : \text{ $n \in \mathbb N$, and $a_i \in \Sigma$ for all $i$}\}.\]

We will use the words “string” and “word” to refer to a finite-length string/word. When we want to talk about infinite-length strings, we will explicitly use the word “infinite”.

By Definition (Alphabet, symbol/character), an alphabet \(\Sigma\) cannot be the empty set. This implies that \(\Sigma^*\) is an infinite set since there are infinitely many strings of finite length over a non-empty \(\Sigma\). We will later see that \(\Sigma^*\) is always countably infinite.

The reversal of a string \(w = a_1a_2\ldots a_n\), denoted \(w^R\), is the string \(w^R = a_na_{n-1}\ldots a_1\).

The concatenation of strings \(u\) and \(v\) in \(\Sigma^*\), denoted by \(uv\) or \(u \cdot v\), is the string obtained by joining together \(u\) and \(v\).

For \(n \in \mathbb N\), the \(n\)’th power of a string \(u\), denoted by \(u^n\), is the word obtained by concatenating \(u\) with itself \(n\) times.

We say that a string \(u\) is a substring of string \(w\) if \(w = xuy\) for some strings \(x\) and \(y\).

For strings \(x\) and \(y\), we say that \(y\) is a prefix of \(x\) if there exists a string \(z\) such that \(x = yz\). If \(z \neq \epsilon\), then \(y\) is a proper prefix of \(x\).

We say that \(y\) is a suffix of \(x\) if there exists a string \(z\) such that \(x = zy\). If \(z \neq \epsilon\), then \(y\) is a proper suffix of \(x\).
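The string operations defined above map directly onto built-in operations on Python strings. This is a small illustrative sketch with hypothetical function names, where the empty Python string `""` plays the role of \(\epsilon\).

```python
def reversal(w: str) -> str:
    """w^R: the symbols of w in reverse order."""
    return w[::-1]


def power(u: str, n: int) -> str:
    """u^n: u concatenated with itself n times (u^0 is the empty string)."""
    return u * n


def is_substring(u: str, w: str) -> bool:
    """True iff w = x u y for some strings x and y."""
    return u in w


def is_prefix(y: str, x: str) -> bool:
    """True iff x = y z for some string z."""
    return x.startswith(y)


def is_proper_prefix(y: str, x: str) -> bool:
    """True iff x = y z for some non-empty z, i.e. y is a prefix and y != x."""
    return is_prefix(y, x) and y != x


def is_suffix(y: str, x: str) -> bool:
    """True iff x = z y for some string z."""
    return x.endswith(y)


print(reversal("1010"), power("ab", 3), is_proper_prefix("10", "1010"))
```

Note that, under these definitions, \(\epsilon\) is a prefix, a suffix and a substring of every string.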

Let \(A\) be a set and let \(\Sigma\) be an alphabet. An encoding of the elements of \(A\), using \(\Sigma\), is an injective function \(\text{Enc}: A \to \Sigma^*\). We denote the encoding of \(a \in A\) by \(\left \langle a \right\rangle\).

If \(w \in \Sigma^*\) is such that there is some \(a \in A\) with \(w = \left \langle a \right\rangle\), then we say \(w\) is a valid encoding of an element in \(A\).
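As one concrete illustration (an example chosen here, not taken from the text), the natural numbers can be encoded over \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\) by their binary representation without leading zeros. The names `enc` and `is_valid_encoding` are hypothetical.

```python
def enc(n: int) -> str:
    """Encode a natural number as its binary representation (no leading zeros)."""
    if n < 0:
        raise ValueError("this encoding is only defined for natural numbers")
    return format(n, "b")


def is_valid_encoding(w: str) -> bool:
    """True iff w = enc(n) for some natural number n."""
    if w == "" or any(c not in "01" for c in w):
        return False
    return w == "0" or w[0] == "1"          # leading zeros are never produced


# Injectivity on a finite range: distinct numbers get distinct strings.
codes = {enc(n) for n in range(1000)}
print(enc(5), len(codes), is_valid_encoding("0101"))
```

With this choice, a string such as \({\texttt{0101}}\) is in \(\Sigma^*\) but is not a valid encoding, which shows that an encoding need not be surjective.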

Describe an encoding of \(\mathbb Z\) using the alphabet \(\Sigma = \{{\texttt{1}}\}\).

Let \(\Sigma\) be an alphabet. Any function \(f: \Sigma^* \to \Sigma^*\) is called a function problem over the alphabet \(\Sigma\).

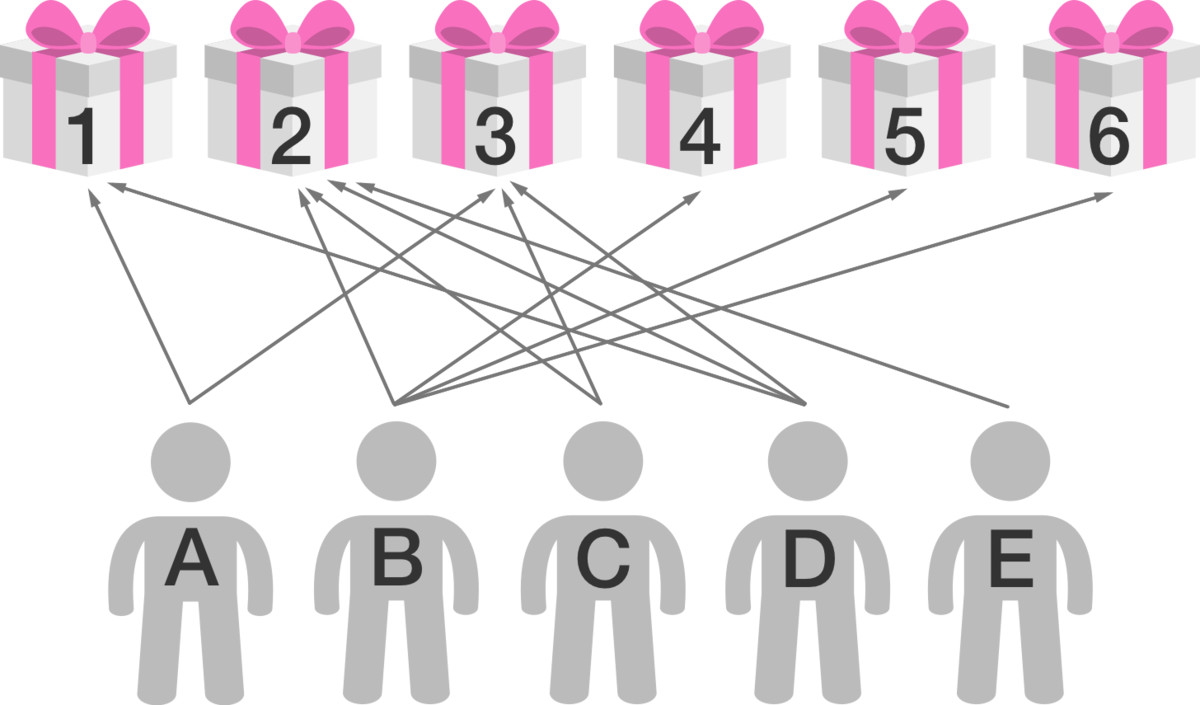

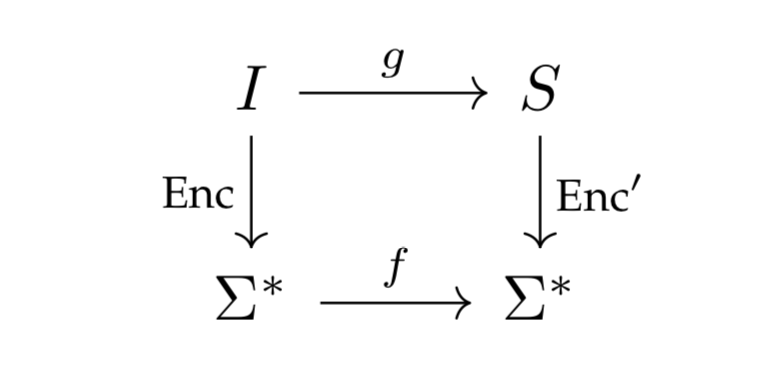

A function problem is often derived from a function \(g: I \to S\), where \(I\) is a set of objects called instances and \(S\) is a set of objects called solutions. The derivation is done through encodings \(\text{Enc}: I \to \Sigma^*\) and \(\text{Enc}': S \to \Sigma^*\). With these encodings, we can create the function problem \(f : \Sigma^* \to \Sigma^*\). In particular, if \(w = \left \langle x \right\rangle\) for some \(x \in I\), then we define \(f(w)\) to be \(\text{Enc}'(g(x))\).

One thing we have to be careful about is defining \(f(w)\) for a word \(w \in \Sigma^*\) that does not correspond to an encoding of an object in \(I\) (such a word does not correspond to an instance of the function problem). To handle this, we can identify one of the strings in \(\Sigma^*\) as an error string and define \(f(w)\) to be that string.

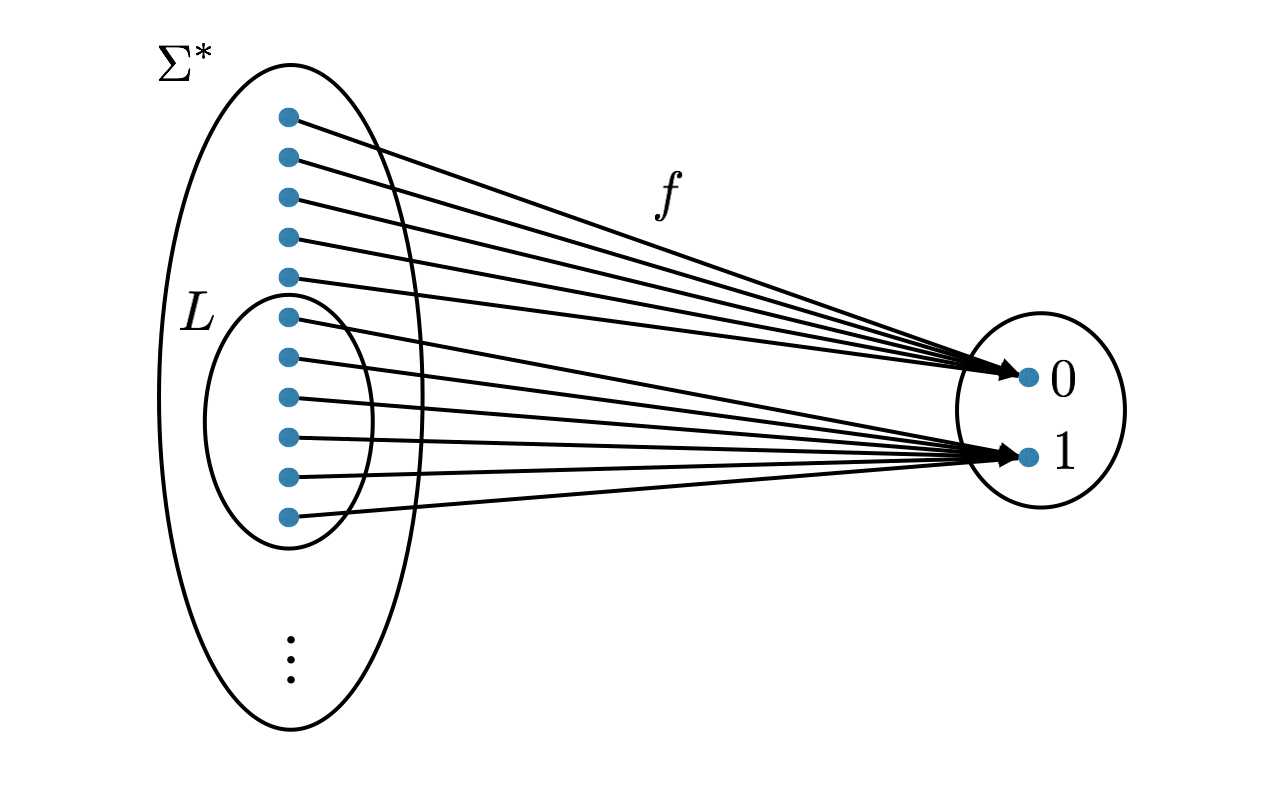

Let \(\Sigma\) be an alphabet. Any function \(f: \Sigma^* \to \{0,1\}\) is called a decision problem over the alphabet \(\Sigma\). The co-domain of the function is not important as long as it has two elements. One can think of the co-domain as \(\{\text{No}, \text{Yes}\},\) \(\{\text{False}, \text{True}\}\) or \(\{ \text{Reject}, \text{Accept}\}\).

As with a function problem, a decision problem is often derived from a function \(g: I \to \{0,1\}\), where \(I\) is a set of instances. The derivation is done through an encoding \(\text{Enc}: I \to \Sigma^*\), which allows us to define the decision problem \(f: \Sigma^* \to \{0,1\}\). Any word \(w \in \Sigma^*\) that does not correspond to an encoding of an instance is mapped to \(0\) by \(f\).

Instances that map to \(1\) are often called yes-instances and instances that map to \(0\) are often called no-instances.
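The derivation of a decision problem \(f\) from a function \(g\) on instances can be sketched as follows. As an assumed example, the instances are natural numbers, \(g(n) = 1\) exactly when \(n\) is a perfect square, and the encoding is binary without leading zeros; the names `g`, `decode` and `f` are hypothetical.

```python
import math
from typing import Optional


def g(n: int) -> int:
    """Function on instances: 1 iff n is a perfect square."""
    return 1 if math.isqrt(n) ** 2 == n else 0


def decode(w: str) -> Optional[int]:
    """Invert the encoding; return None when w encodes no instance."""
    is_binary = w != "" and all(c in "01" for c in w)
    if is_binary and (w == "0" or w[0] == "1"):
        return int(w, 2)
    return None


def f(w: str) -> int:
    """Decision problem on strings: invalid encodings are mapped to 0."""
    x = decode(w)
    return 0 if x is None else g(x)


print(f("100"), f("101"), f("0100"))
```

Here \({\texttt{100}}\) encodes the yes-instance \(4\), \({\texttt{101}}\) encodes the no-instance \(5\), and \({\texttt{0100}}\) is not an encoding of any instance, so \(f\) maps it to \(0\).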

Any (possibly infinite) subset \(L \subseteq \Sigma^*\) is called a language over the alphabet \(\Sigma\).

Since a language is a set, the size of a language refers to the size of that set. A language can have finite or infinite size. This is not in conflict with the fact that every language consists of finite-length strings.

The language \(\{\epsilon\}\) is not the same language as \(\varnothing\). The former has size \(1\) whereas the latter has size \(0\).

There is a one-to-one correspondence between decision problems and languages. Let \(f:\Sigma^* \to \{0,1\}\) be some decision problem. Now define \(L \subseteq \Sigma^*\) to be the set of all words in \(\Sigma^*\) that \(f\) maps to \(1\). This \(L\) is the language corresponding to the decision problem \(f\). Conversely, given any language \(L \subseteq \Sigma^*\), we can define the corresponding decision problem \(f:\Sigma^* \to \{0,1\}\) by setting \(f(w) = 1\) if and only if \(w \in L\). We consider the set of languages and the set of decision problems to be the same set of objects.

It is important to get used to this correspondence since we will often switch between talking about languages and talking about decision problems without explicit notice.

When talking about decision/function problems or languages, if the alphabet is not specified, it is assumed to be the binary alphabet \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\).

You may be wondering why we make the definition of a language rather than always viewing a decision problem as a function from \(\Sigma^*\) to \(\{0,1\}\). The reason is that some operations and notation are more conveniently expressed using sets rather than functions, and therefore in certain situations, it can be nicer to think of decision problems as sets.

The reversal of a language \(L \subseteq \Sigma^*\), denoted \(L^R\), is the language \[L^R = \{w^R \in \Sigma^* : w \in L\}.\]

The concatenation of languages \(L_1, L_2 \subseteq \Sigma^*\), denoted \(L_1L_2\) or \(L_1 \cdot L_2\), is the language \[L_1L_2 = \{uv \in \Sigma^* : u \in L_1, v \in L_2\}.\]

For \(n \in \mathbb N\), the \(n\)’th power of a language \(L \subseteq \Sigma^*\), denoted \(L^n\), is the language obtained by concatenating \(L\) with itself \(n\) times, that is, \[L^n = \underbrace{L \cdot L \cdot L \cdots L}_{n \text{ times}}.\] Equivalently, \[L^n = \{u_1u_2\cdots u_n \in \Sigma^* : u_i \in L \text{ for all } i \in \{1,2,\ldots,n\}\}.\]

The star of a language \(L \subseteq \Sigma^*\), denoted \(L^*\), is the language \[L^* = \bigcup_{n \in \mathbb N} L^n.\] Equivalently, \[L^* = \{u_1u_2\cdots u_n \in \Sigma^* : n \in \mathbb N, u_i \in L \text{ for all } i \in \{1,2,\ldots,n\}\}.\] Note that we always have \(\epsilon\in L^*\). Sometimes we may prefer to not include \(\epsilon\) by default, so we define \[L^+ = \bigcup_{n \in \mathbb N^+} L^n.\]
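For finite languages, the operations above can be computed directly with Python sets. The sketch below uses hypothetical function names; since \(L^*\) is usually infinite, `star_up_to` only returns the words of \(L^*\) up to a given length, and \(L^0 = \{\epsilon\}\) is taken as the convention for the zeroth power.

```python
def lang_reversal(L: set) -> set:
    """L^R: the reversal of every word in L."""
    return {w[::-1] for w in L}


def concat(L1: set, L2: set) -> set:
    """L1 L2: every word uv with u in L1 and v in L2."""
    return {u + v for u in L1 for v in L2}


def lang_power(L: set, n: int) -> set:
    """L^n, with L^0 = {epsilon} by convention."""
    result = {""}
    for _ in range(n):
        result = concat(result, L)
    return result


def star_up_to(L: set, max_len: int) -> set:
    """All words of L* whose length is at most max_len."""
    result = {""}
    frontier = {""}
    while frontier:
        # Extend the newest words by one more factor from L and keep
        # only short enough words that have not been seen before.
        extended = concat(frontier, L)
        frontier = {w for w in extended if len(w) <= max_len} - result
        result |= frontier
    return result


print(sorted(star_up_to({"110"}, 6), key=len))
```

Representing languages as sets makes it easy to experiment with small examples, although an experiment on finite languages cannot settle a claim about all languages.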

Prove or disprove: If \(L_1, L_2 \subseteq \{{\texttt{a}},{\texttt{b}}\}^*\) are languages, then \((L_1 \cap L_2)^* = L_1^* \cap L_2^*\).

Prove or disprove the following:

If \(A, B, C \subseteq \{{\texttt{a}},{\texttt{b}}\}^*\) are languages, then \(A(B \cup C) = AB \cup AC\).

If \(A, B, C \subseteq \{{\texttt{a}},{\texttt{b}}\}^*\) are languages, then \(A(B \cap C) = AB \cap AC\).

Is it true that for any language \(L\), \((L^*)^R = (L^R)^*\)? Prove your answer.

Prove or disprove: For any language \(L\), \(L^* = (L^*)^*\).

We denote by \(\mathsf{ALL}\) the set of all languages (over the default alphabet \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\)).

Given an alphabet \(\Sigma\), what is the definition of \(\Sigma^*\)?

What is the definition of a language?

Is \(\varnothing\) a language? If it is, what is the decision problem corresponding to it?

Is \(\Sigma^*\) a language? If it is, what is the decision problem corresponding to it?

Given two languages \(L_1\) and \(L_2\), what are the definitions of \(L_1L_2\) and \(L_1^*\)?

True or false: The language \(\{{\texttt{011}}, {\texttt{101}}, {\texttt{110}}\}^*\) is the set of all binary strings containing twice as many \({\texttt{1}}\)’s as \({\texttt{0}}\)’s.

True or false: The set \(\{\varnothing\}\) is a language, where \(\varnothing\) denotes the empty set.

True or false: An infinite language must contain infinite-length strings.

True or false: A language can contain infinite-length strings.

Define finite languages \(L_1\) and \(L_2\) such that \(|L_1L_2| < |L_1||L_2|\).

Define finite languages \(L_1\) and \(L_2\) such that \(|L_1L_2| = |L_1||L_2|\).

True or false: For any language \(L\) and any \(n \in \mathbb N\), we have \(L^n \subseteq L^{n+1}\).

True or false: For any language \(L\) and any \(n \in \mathbb N\), we have \(L^n \subseteq L^*\).

Let \(\Sigma\) be an alphabet. For which languages \(L \subseteq \Sigma^*\) is it true that \(L\cdot L\) is infinite?

Let \(\Sigma\) be an alphabet. For which languages \(L \subseteq \Sigma^*\) is it true that \(L^*\) is infinite?

What is the definition of an encodable set? Which sets are encodable? How do we deal with sets that are not encodable?

What is the motivation behind defining an encoding as a mapping to finite-length strings as opposed to both finite and infinite-length strings?

What is the definition of a function problem? Give one example of a function problem.

What is the definition of a decision problem? Give one example of a decision problem.

Briefly explain the correspondence between decision problems and languages.

Write down the primality testing decision problem as a language.

Why do we focus on decision problems in theoretical computer science?

Here are the important things to keep in mind from this chapter.

All the definitions and notation introduced in this chapter are fundamental, and we will use them liberally without reminders. As we move to more advanced topics, it is important that the definitions in this chapter do not add to the mental burden and make things overwhelming for you. Even when you are totally comfortable with the definitions, we will be covering concepts that are quite challenging. Therefore, you want to be able to spend all your mental energy on digesting the new material without getting confused by the definitions and terminology from previous chapters. If you invest the time to really understand the definitions now, it will save you time later.

There is only one data type in theoretical computer science: strings. Every other type of data (e.g. numbers, images, graphs, videos) can be encoded with strings. Algorithms/computers work with these encodings.

A decision problem is a special kind of function problem. In a decision problem, for every possible input, the output is either True or False. Our focus for the next few chapters will be on decision problems.

There is a one-to-one correspondence between decision problems and languages. It is important to be able to easily switch between a language and the corresponding decision problem in your mind.

Anything that processes information can be called a computer. However, there can be restrictions on how the information can be processed (either universal restrictions imposed by, say, the laws of physics, or restrictions imposed by the particular setting we are interested in).

A computational model is a set of allowed rules for information processing. Given a computational model, we can talk about the computers (or machines) allowed by the computational model. A computer is a specific instantiation of the computational model, or in other words, it is a specific collection of information processing rules allowed by the computational model.

Note that even though the terms “computer” and “machine” suggest a physical device, in this course we are not interested in physical representations that realize computers, but rather in the mathematical representations. The term algorithm is synonymous with computer (and machine) and makes it clearer that we are not necessarily talking about a physical device.

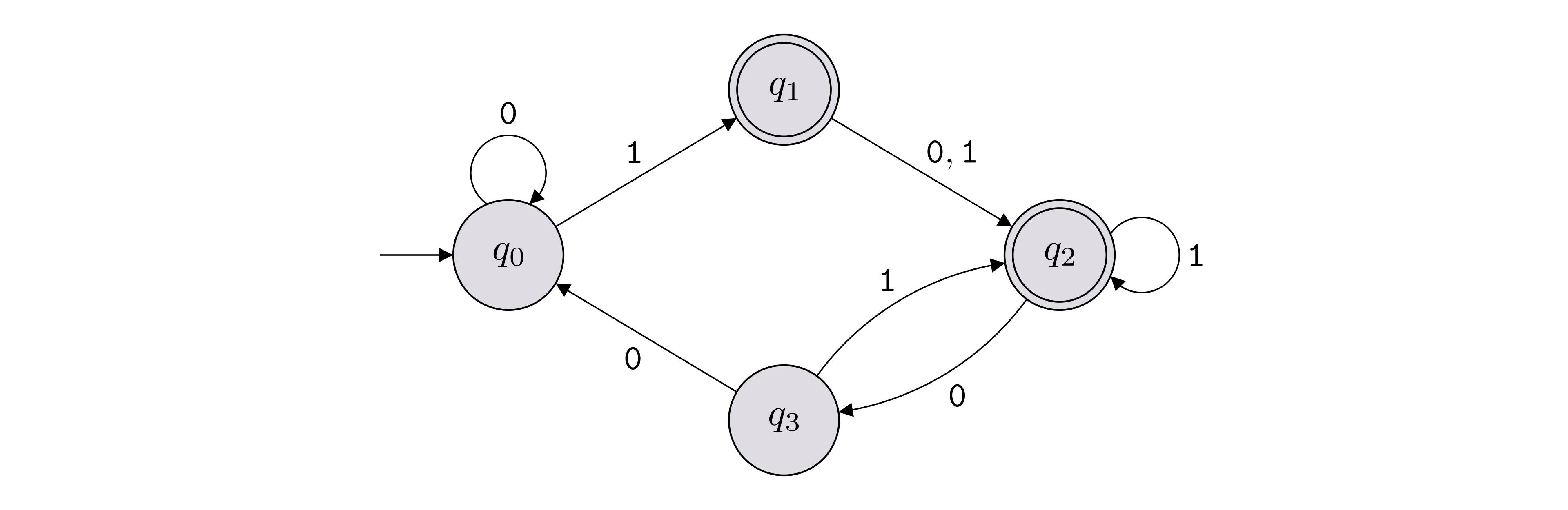

A deterministic finite automaton (DFA) \(M\) is a \(5\)-tuple \[M = (Q, \Sigma, \delta, q_0, F),\] where

\(Q\) is a non-empty finite set (which we refer to as the set of states of the DFA);

\(\Sigma\) is a non-empty finite set (which we refer to as the alphabet of the DFA);

\(\delta\) is a function of the form \(\delta: Q \times \Sigma \to Q\) (which we refer to as the transition function of the DFA);

\(q_0 \in Q\) is an element of \(Q\) (which we refer to as the start state of the DFA);

\(F \subseteq Q\) is a subset of \(Q\) (which we refer to as the set of accepting states of the DFA).

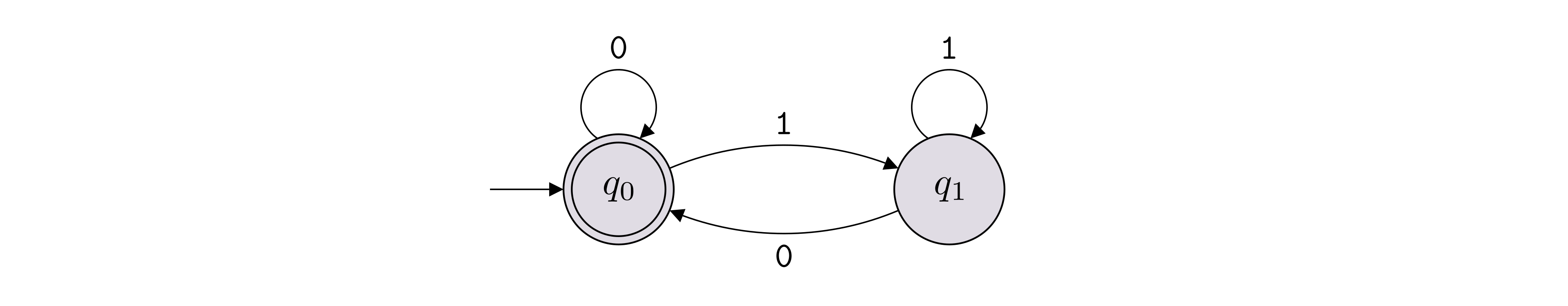

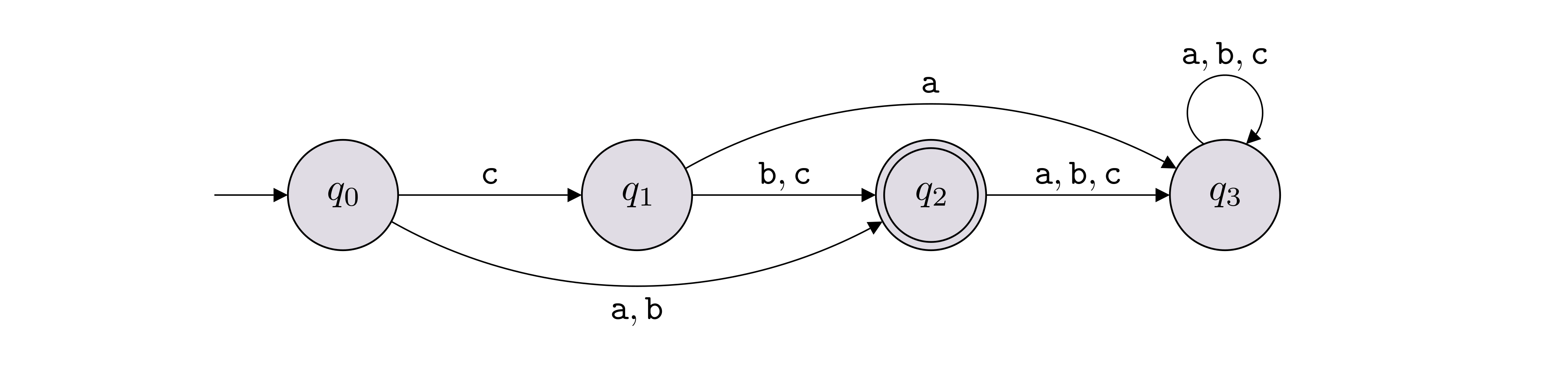

It is very common to represent a DFA with a state diagram. Below is an example of how we draw a state diagram of a DFA:

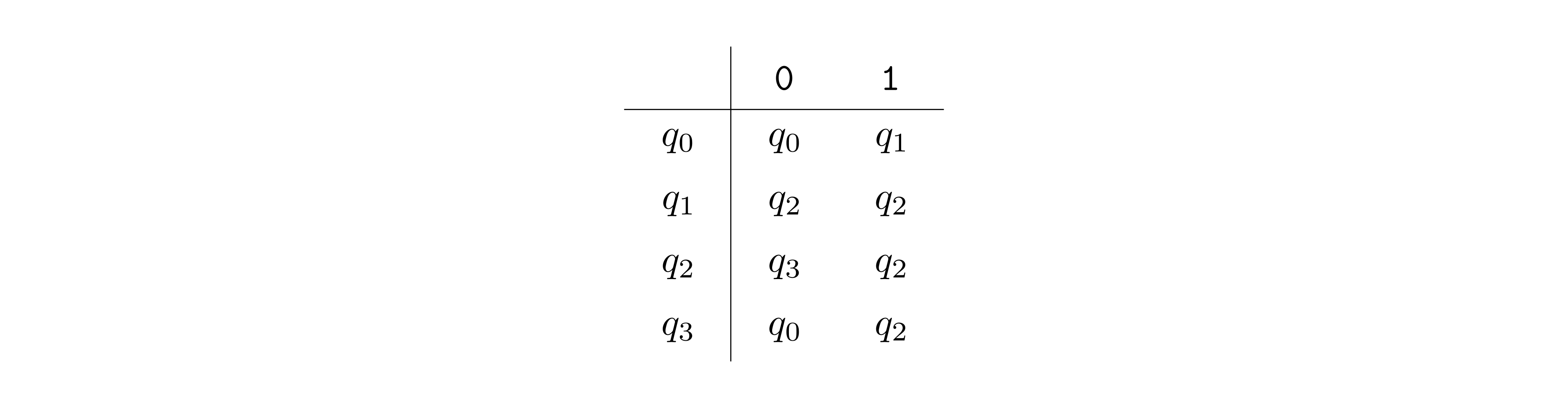

In this example, \(\Sigma = \{{\texttt{0}},{\texttt{1}}\}\), \(Q = \{q_0,q_1,q_2,q_3\}\), \(F = \{q_1,q_2\}\). The labeled arrows between the states encode the transition function \(\delta\), which can also be represented with a table as shown below (row \(q_i \in Q\) and column \(b \in \Sigma\) contains \(\delta(q_i, b)\)).

The start state is labeled with \(q_0\), but if another label is used, we can identify the start state in the state diagram by identifying the state with an arrow that does not originate from another state and goes into that state.

In the definition above, we used the labels \(Q, \Sigma, \delta, q_0, F\). One can of course use other labels when defining a DFA as long as it is clear what each label represents.

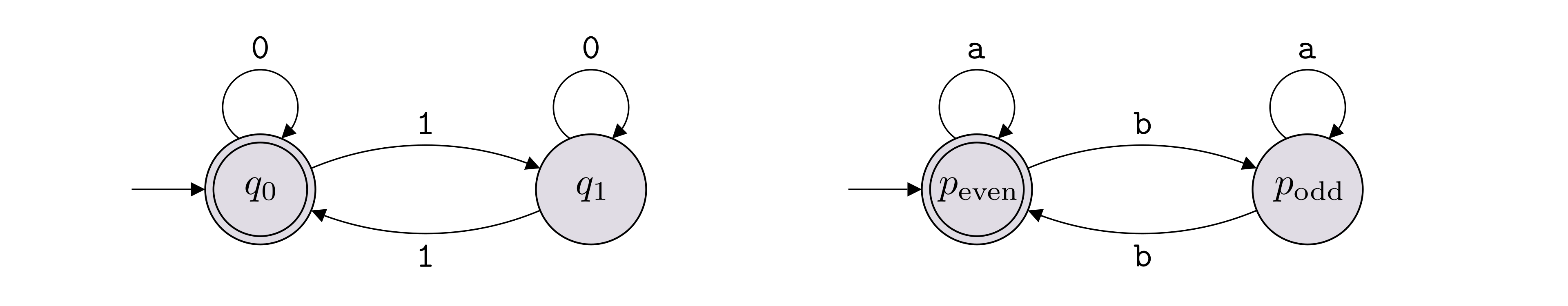

We’ll consider two DFAs to be equivalent/same if they are the same machine up to renaming the elements of the sets \(Q\) and \(\Sigma\). For instance, the two DFAs below are considered to be the same even though they have different labels on the states and use different alphabets.

The flexibility with the choice of labels allows us to be more descriptive when we define a DFA. For instance, we can give labels to the states that communicate the “purpose” or “role” of each state.

Let \(M = (Q, \Sigma, \delta, q_0, F)\) be a DFA and let \(w = w_1w_2 \cdots w_n\) be a string over an alphabet \(\Sigma\) (so \(w_i \in \Sigma\) for each \(i \in \{1,2,\ldots,n\}\)). The computation path of \(M\) with respect to \(w\) is a specific sequence of states \(r_0, r_1, r_2, \ldots , r_n\) (where each \(r_i \in Q\)). We’ll often write this sequence as follows.

| \(w_1\) | \(w_2\) | \(\ldots\) | \(w_n\) | |
|---|---|---|---|---|
| \(r_0\) | \(r_1\) | \(r_2\) | \(\ldots\) | \(r_n\) |

These states correspond to the states reached when the DFA reads the input \(w\) one character at a time. This means \(r_0 = q_0\), and \[\forall i \in \{1,2,\ldots,n\}, \quad \delta(r_{i-1},w_i) = r_i.\] An accepting computation path is such that \(r_n \in F\), and a rejecting computation path is such that \(r_n \not\in F\).

We say that \(M\) accepts a string \(w \in \Sigma^*\) (or “\(M(w)\) accepts”, or “\(M(w) = 1\)”) if the computation path of \(M\) with respect to \(w\) is an accepting computation path. Otherwise, we say that \(M\) rejects the string \(w\) (or “\(M(w)\) rejects”, or “\(M(w) = 0\)”).

Let \(M = (Q, \Sigma, \delta, q_0, F)\) be a DFA. The transition function \(\delta : Q \times \Sigma \to Q\) extends to \(\delta^* : Q \times \Sigma^* \to Q\), where \(\delta^*(q, w)\) is defined as the state we end up in if we start at \(q\) and read the string \(w = w_1 \ldots w_n\). Or in other words, \[\delta^*(q, w) = \delta(\ldots\delta(\delta(\delta(q,w_1),w_2),w_3)\ldots, w_n).\] The star in the notation can be dropped and \(\delta\) can be overloaded to represent both a function \(\delta : Q \times \Sigma \to Q\) and a function \(\delta : Q \times \Sigma^* \to Q\). Using this notation, a word \(w\) is accepted by the DFA \(M\) if \(\delta(q_0, w) \in F\).
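The definitions of computation path, acceptance and \(\delta^*\) can be exercised with a small simulator. This is a minimal sketch: the class `DFA` and its method names are hypothetical, and the example machine (accepting binary strings with an even number of \({\texttt{1}}\)’s) is chosen here for illustration and is not one of the DFAs pictured in the text.

```python
from dataclasses import dataclass


@dataclass
class DFA:
    states: set        # Q
    alphabet: set      # Sigma
    delta: dict        # transition function: (state, symbol) -> state
    start: str         # q_0
    accepting: set     # F

    def computation_path(self, w: str) -> list:
        """Return r_0, r_1, ..., r_n with r_0 = q_0 and delta(r_{i-1}, w_i) = r_i."""
        path = [self.start]
        for symbol in w:
            path.append(self.delta[(path[-1], symbol)])
        return path

    def delta_star(self, q: str, w: str) -> str:
        """Extended transition function: the state reached from q after reading w."""
        for symbol in w:
            q = self.delta[(q, symbol)]
        return q

    def accepts(self, w: str) -> bool:
        """M accepts w iff delta_star(q_0, w) is in F."""
        return self.delta_star(self.start, w) in self.accepting


# Illustrative DFA: accepts strings with an even number of 1's.
even_ones = DFA(
    states={"even", "odd"},
    alphabet={"0", "1"},
    delta={
        ("even", "0"): "even", ("even", "1"): "odd",
        ("odd", "0"): "odd", ("odd", "1"): "even",
    },
    start="even",
    accepting={"even"},
)

print(even_ones.computation_path("1011"), even_ones.accepts("1011"))
```

The last state of the computation path always equals `delta_star(start, w)`, which is why the two descriptions of acceptance agree.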

Let \(f: \Sigma^* \to \{0,1\}\) be a decision problem and let \(M\) be a DFA over the same alphabet. We say that \(M\) solves (or decides, or computes) \(f\) if the input/output behavior of \(M\) matches \(f\) exactly, in the following sense: for all \(w \in \Sigma^*\), \(M(w) = f(w)\).

If \(L\) is the language corresponding to \(f\), the above definition is equivalent to saying that \(M\) solves (or decides, or computes) \(L\) if the following holds:

if \(w \in L\), then \(M\) accepts \(w\) (i.e. \(M(w) = 1\));

if \(w \not \in L\), then \(M\) rejects \(w\) (i.e. \(M(w) = 0\)).

For each DFA \(M\) below, define \(L(M)\).

For each language below (over the alphabet \(\Sigma = \{{\texttt{0}},{\texttt{1}}\}\)), draw a DFA solving it.

\(\{{\texttt{101}}, {\texttt{110}}\}\)

\(\{{\texttt{0}},{\texttt{1}}\}^* \setminus \{{\texttt{101}}, {\texttt{110}}\}\)

\(\{x \in \{{\texttt{0}},{\texttt{1}}\}^* : \text{$x$ starts and ends with the same bit}\}\)

\(\{{\texttt{110}}\}^* = \{\epsilon, {\texttt{110}}, {\texttt{110110}}, {\texttt{110110110}}, \ldots\}\)

\(\{x \in \{{\texttt{0}},{\texttt{1}}\}^*: \text{$x$ contains ${\texttt{110}}$ as a substring}\}\)

Let \(L\) be a finite language, i.e., it contains a finite number of words. At a high level, describe why there is a DFA solving \(L\).

A language \(L \subseteq \Sigma^*\) is called a regular language if there is a deterministic finite automaton \(M\) that solves \(L\).

Let \(\Sigma = \{{\texttt{0}},{\texttt{1}}\}\). Is the following language regular? \[L = \{w \in \{{\texttt{0}},{\texttt{1}}\}^* : w \text{ has an equal number of occurrences of ${\texttt{01}}$ and ${\texttt{10}}$ as substrings}\}\]

We denote by \(\mathsf{REG}\) the set of all regular languages (over the default alphabet \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\)).

Let \(\Sigma = \{{\texttt{0}},{\texttt{1}}\}\). The language \(L = \{{\texttt{0}}^n {\texttt{1}}^n: n \in \mathbb N\}\) is not regular.

In the proof of the above theorem, we defined the set \(P = \{{\texttt{0}}^n : n \in \{0,1,\ldots,k\}\}\) and then applied the pigeonhole principle. Explain why picking the following sets would not have worked in that proof.

\(P = \{{\texttt{1}}^n : n \in \{1,2,\ldots,k+1\}\}\)

\(P = \{{\texttt{1}}, {\texttt{11}}, {\texttt{000}}, {\texttt{0000}}, {\texttt{00000}}, \ldots, {\texttt{0}}^{k+1}\}\)

Let \(\Sigma = \{{\texttt{a}},{\texttt{b}},{\texttt{c}}\}\). Prove that \(L = \{{\texttt{c}}^{251} {\texttt{a}}^n {\texttt{b}}^{2n}: n \in \mathbb N\}\) is not regular.

In Exercise (Would any set of pigeons work?) we saw that the “pigeon set” that we use to apply the pigeonhole principle must be chosen carefully. We’ll call a pigeon set a fooling pigeon set if it is a pigeon set that “works”. That is, given a DFA with \(k\) states that is assumed to solve a non-regular \(L\), a fooling pigeon set of size \(k+1\) allows us to carry out the contradiction proof, and conclude that \(L\) is non-regular. Identify the property that a fooling pigeon set should have.

Let \(\Sigma = \{{\texttt{a}}\}\). The language \(L = \{{\texttt{a}}^{2^n}: n \in \mathbb N\}\) is not regular.

In the next exercise, we’ll write the proof in a slightly different way to offer a slightly different perspective. In particular, we’ll phrase the proof such that the goal is to show that no DFA solving \(L\) can have a finite number of states. This is done by identifying an infinite set of strings that must all end up in different states (this is the fooling pigeon set that we defined in Exercise (A fooling pigeon set)). Once we have a set of strings \(S\) such that every string in \(S\) must end up in a different state, we can conclude that any DFA solving the language must have at least \(|S|\) states.

This slight change in phrasing of the non-regularity proof makes it clear that even if \(L\) is regular, the technique can be used to prove a lower bound on the number of states of any DFA solving \(L\).

Let \(\Sigma = \{{\texttt{a}},{\texttt{b}},{\texttt{c}}\}\). Prove that \(L = \{{\texttt{a}}^n{\texttt{b}}^n{\texttt{c}}^n: n \in \mathbb N\}\) is not regular.

Is it true that if \(L\) is regular, then its complement \(\Sigma^* \setminus L\) is also regular? In other words, are regular languages closed under the complementation operation?

Is it true that if \(L \subseteq \Sigma^*\) is a regular language, then any \(L' \subseteq L\) is also a regular language?

Let \(\Sigma\) be some finite alphabet. If \(L_1 \subseteq \Sigma^*\) and \(L_2 \subseteq \Sigma^*\) are regular languages, then the language \(L_1 \cup L_2\) is also regular.

Let \(\Sigma\) be some finite alphabet. If \(L_1 \subseteq \Sigma^*\) and \(L_2 \subseteq \Sigma^*\) are regular languages, then the language \(L_1 \cap L_2\) is also regular.
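One common way to establish closure under union and intersection is the product construction, which runs two DFAs in parallel on the same input. The sketch below assumes that approach; DFAs are represented as Python 5-tuples `(Q, Sigma, delta, q0, F)`, and the function names and the two example machines are hypothetical.

```python
from itertools import product


def product_dfa(M1, M2, mode):
    """Build a DFA for the union or intersection of the languages of M1 and M2."""
    Q1, sigma1, delta1, start1, F1 = M1
    Q2, sigma2, delta2, start2, F2 = M2
    if sigma1 != sigma2:
        raise ValueError("both DFAs must use the same alphabet")
    Q = set(product(Q1, Q2))                # states are pairs (p, q)
    delta = {
        ((p, q), a): (delta1[(p, a)], delta2[(q, a)])   # step both machines
        for (p, q) in Q
        for a in sigma1
    }
    if mode == "union":
        F = {(p, q) for (p, q) in Q if p in F1 or q in F2}
    elif mode == "intersection":
        F = {(p, q) for (p, q) in Q if p in F1 and q in F2}
    else:
        raise ValueError("mode must be 'union' or 'intersection'")
    return (Q, sigma1, delta, (start1, start2), F)


def accepts(M, w):
    _, _, delta, state, F = M
    for symbol in w:
        state = delta[(state, symbol)]
    return state in F


def all_words(sigma, max_len):
    """Yield every word over sigma of length at most max_len."""
    for n in range(max_len + 1):
        for letters in product(sorted(sigma), repeat=n):
            yield "".join(letters)


# Illustrative machines: even number of 1's, and words ending with 0.
EVEN_ONES = ({"e", "o"}, {"0", "1"},
             {("e", "0"): "e", ("e", "1"): "o", ("o", "0"): "o", ("o", "1"): "e"},
             "e", {"e"})
ENDS_WITH_ZERO = ({"n", "y"}, {"0", "1"},
                  {("n", "0"): "y", ("n", "1"): "n", ("y", "0"): "y", ("y", "1"): "n"},
                  "n", {"y"})

union_dfa = product_dfa(EVEN_ONES, ENDS_WITH_ZERO, "union")
inter_dfa = product_dfa(EVEN_ONES, ENDS_WITH_ZERO, "intersection")
print(accepts(union_dfa, "1"), accepts(inter_dfa, "110"))
```

The two constructions differ only in the choice of accepting states; the state set, start state and transition function are identical.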

Give a direct proof (without using the fact that regular languages are closed under complementation, union and intersection) that if \(L_1\) and \(L_2\) are regular languages, then \(L_1 \setminus L_2\) is also regular.

Suppose \(L_1, \ldots, L_k\) are all regular languages. Is it true that their union \(\bigcup_{i=1}^k L_i\) must be a regular language?

Suppose \(L_0, L_1, L_2, \ldots\) is an infinite sequence of regular languages. Is it true that their union \(\bigcup_{i\geq 0} L_i\) must be a regular language?

Suppose \(L_1\) and \(L_2\) are not regular languages. Is it always true that \(L_1 \cup L_2\) is not a regular language?

Suppose \(L \subseteq \Sigma^*\) is a regular language. Show that the following languages are also regular: \[\begin{aligned} \text{SUFFIXES}(L) & = \{x \in \Sigma^* : \text{$yx \in L$ for some $y \in \Sigma^*$}\}, \\ \text{PREFIXES}(L) & = \{y \in \Sigma^* : \text{$yx \in L$ for some $x \in \Sigma^*$}\}.\end{aligned}\]

If \(L_1, L_2 \subseteq \Sigma^*\) are regular languages, then the language \(L_1L_2\) is also regular.

Let \(M = (Q, \Sigma, \delta, q_0, F)\) be a DFA. We define the generalized transition function \(\delta_\wp: \wp(Q) \times \Sigma \to \wp(Q)\) as follows. For \(S \subseteq Q\) and \(\sigma \in \Sigma\), \[\delta_{\wp}(S, \sigma) = \{\delta(q, \sigma) : q \in S\}.\]
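The generalized transition function simply applies \(\delta\) to every state of a set at once. A minimal sketch, with \(\delta\) stored as a Python dictionary and a hypothetical two-state example (the function name `delta_powerset` is also an assumption):

```python
def delta_powerset(delta: dict, S, sigma: str) -> frozenset:
    """Return {delta(q, sigma) : q in S} for a set of states S."""
    return frozenset(delta[(q, sigma)] for q in S)


# Illustrative transition function of a DFA tracking the last symbol read.
delta = {
    ("n", "0"): "y", ("n", "1"): "n",
    ("y", "0"): "y", ("y", "1"): "n",
}

print(delta_powerset(delta, {"n", "y"}, "0"))
```

Note that \(\delta_\wp(\varnothing, \sigma) = \varnothing\), and that the output set can be smaller than the input set when several states share a successor.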

In the proof of Theorem (Regular languages are closed under concatenation), we defined the set of states for the DFA solving \(L_1L_2\) as \(Q'' = Q \times \wp(Q')\). Construct a DFA for \(L_1L_2\) in which \(Q''\) is equal to \(\wp(Q \cup Q')\), i.e., specify how \(\delta''\), \(q_0''\) and \(F''\) should be defined with respect to \(Q'' = \wp(Q \cup Q')\). Proof of correctness is not required.

Critique the following argument that claims to establish that regular languages are closed under the star operation, that is, if \(L\) is a regular language, then so is \(L^*\).

Let \(L\) be a regular language. We know that by definition \(L^* = \bigcup_{n \in \mathbb N} L^n\), where \[L^n = \{u_1 u_2 \ldots u_n : u_i \in L \text{ for all $i$} \}.\] We know that for all \(n\), \(L^n\) must be regular using Theorem (Regular languages are closed under concatenation). And since \(L^n\) is regular for all \(n\), we know \(L^*\) must be regular using Theorem (Regular languages are closed under union).

Show that regular languages are closed under the star operation as follows: First show that if \(L\) is regular, then so is \(L^+\), which is defined as the union \[L^+ = \bigcup_{n \in \mathbb N^+} L^n.\] For this part, given a DFA for \(L\), show how to construct a DFA for \(L^+\). A proof of correctness is not required. In order to conclude that \(L^*\) is regular, observe that \(L^* = L^+ \cup \{\epsilon\}\), and use the fact that regular languages are closed under union.

What are the \(5\) components of a DFA?

Let \(M\) be a DFA. What does \(L(M)\) denote?

Draw a DFA solving \(\varnothing\). Draw a DFA solving \(\Sigma^*\).

True or false: Given a language \(L\), there is at most one DFA that solves it.

Fix some alphabet \(\Sigma\). How many DFAs are there with exactly one state?

True or false: For any DFA, all the states are “reachable”. That is, if \(D = (Q, \Sigma, \delta, q_0, F)\) is a DFA and \(q \in Q\) is one of its states, then there is a string \(w \in \Sigma^*\) such that \(\delta^*(q_0,w) = q\).

What is the set of all possible inputs for a DFA \(D = (Q, \Sigma, \delta, q_0, F)\)?

Consider the set of all DFAs with \(k\) states over the alphabet \(\Sigma =\{{\texttt{a}}\}\) such that all states are reachable from \(q_0\). What is the cardinality of this set?

What is the definition of a regular language?

Describe the general strategy (the high level steps) for showing that a language is not regular.

Give \(3\) examples of non-regular languages.

Let \(L \subseteq \{{\texttt{a}}\}^*\) be a language consisting of all strings of \({\texttt{a}}\)’s of odd length except length \(251\). Is \(L\) regular?

Let \(L\) be the set of all strings in \(\{{\texttt{0}},{\texttt{1}}\}^*\) that contain at least \(251\) \({\texttt{0}}\)’s and at most \(251\) \({\texttt{1}}\)’s. Is \(L\) regular?

Suppose you are given a regular language \(L\) and you are asked to prove that any DFA solving \(L\) must contain at least \(k\) states (for some \(k\) value given to you). What is a general proof strategy for establishing such a claim?

Let \(M = (Q, \Sigma, \delta, q_0, F)\) be a DFA solving a language \(L\) and let \(M' = (Q', \Sigma, \delta', q'_0, F')\) be a DFA solving a language \(L'\). Describe the \(5\) components of a DFA solving \(L \cup L'\).

True or false: Let \(L_1 \oplus L_2\) denote the set of all words in either \(L_1\) or \(L_2\), but not both. If \(L_1\) and \(L_2\) are regular, then so is \(L_1 \oplus L_2\).

True or false: For languages \(L\) and \(L'\), if \(L \subseteq L'\) and \(L\) is non-regular, then \(L'\) is non-regular.

True or false: If \(L \subseteq \Sigma^*\) is non-regular, then \(\overline{L} = \Sigma^* \setminus L\) is non-regular.

True or false: If \(L_1, L_2 \subseteq \Sigma^*\) are non-regular languages, then so is \(L_1 \cup L_2\).

True or false: Let \(L\) be a non-regular language. There exists \(K \subset L\), \(K \neq L\), such that \(K\) is also non-regular.

By definition a DFA has finitely many states. What is the motivation/reason for this restriction?

Consider the following decision problem: Given as input a DFA, output True if and only if there exists some string \(s \in \Sigma^*\) that the DFA accepts. Write the language corresponding to this decision problem using mathematical notation, and in particular, using set builder notation.

Here are the important things to keep in mind from this chapter.

Given a DFA, describe in English the language that it solves.

Given the description of a regular language, come up with a DFA that solves it.

Given a non-regular language, prove that it is indeed non-regular. Make sure you are not just memorizing a template for these types of proofs, but that you understand all the details of the strategy being employed. Apply the Feynman technique and see if you can teach this kind of proof to someone else.

In proofs establishing a closure property of regular languages, you start with one or more DFAs, and you construct a new one from them. In order to build the new DFA successfully, you have to be comfortable with the 5-tuple notation of a DFA. The constructions can get notation-heavy (with power sets and Cartesian products), but getting comfortable with mathematical notation is one of our learning objectives.

With respect to closure properties of regular languages, prioritize having a very solid understanding of closure under the union operation before you move to the more complicated constructions for the concatenation operation and star operation.

A Turing machine (TM) \(M\) is a 7-tuple \[M = (Q, \Sigma, \Gamma, \delta, q_0, q_\text{acc}, q_\text{rej}),\] where

\(Q\) is a non-empty finite set (which we refer to as the set of states of the TM);

\(\Sigma\) is a non-empty finite set that does not contain the blank symbol \(\sqcup\) (which we refer to as the input alphabet of the TM);

\(\Gamma\) is a finite set such that \(\sqcup\in \Gamma\) and \(\Sigma \subset \Gamma\) (which we refer to as the tape alphabet of the TM);

\(\delta\) is a function of the form \(\delta: Q \times \Gamma \to Q \times \Gamma \times \{\text{L}, \text{R}\}\) (which we refer to as the transition function of the TM);

\(q_0 \in Q\) is an element of \(Q\) (which we refer to as the initial state of the TM or the starting state of the TM);

\(q_\text{acc}\in Q\) is an element of \(Q\) (which we refer to as the accepting state of the TM);

\(q_\text{rej}\in Q\) is an element of \(Q\) such that \(q_\text{rej}\neq q_\text{acc}\) (which we refer to as the rejecting state of the TM).

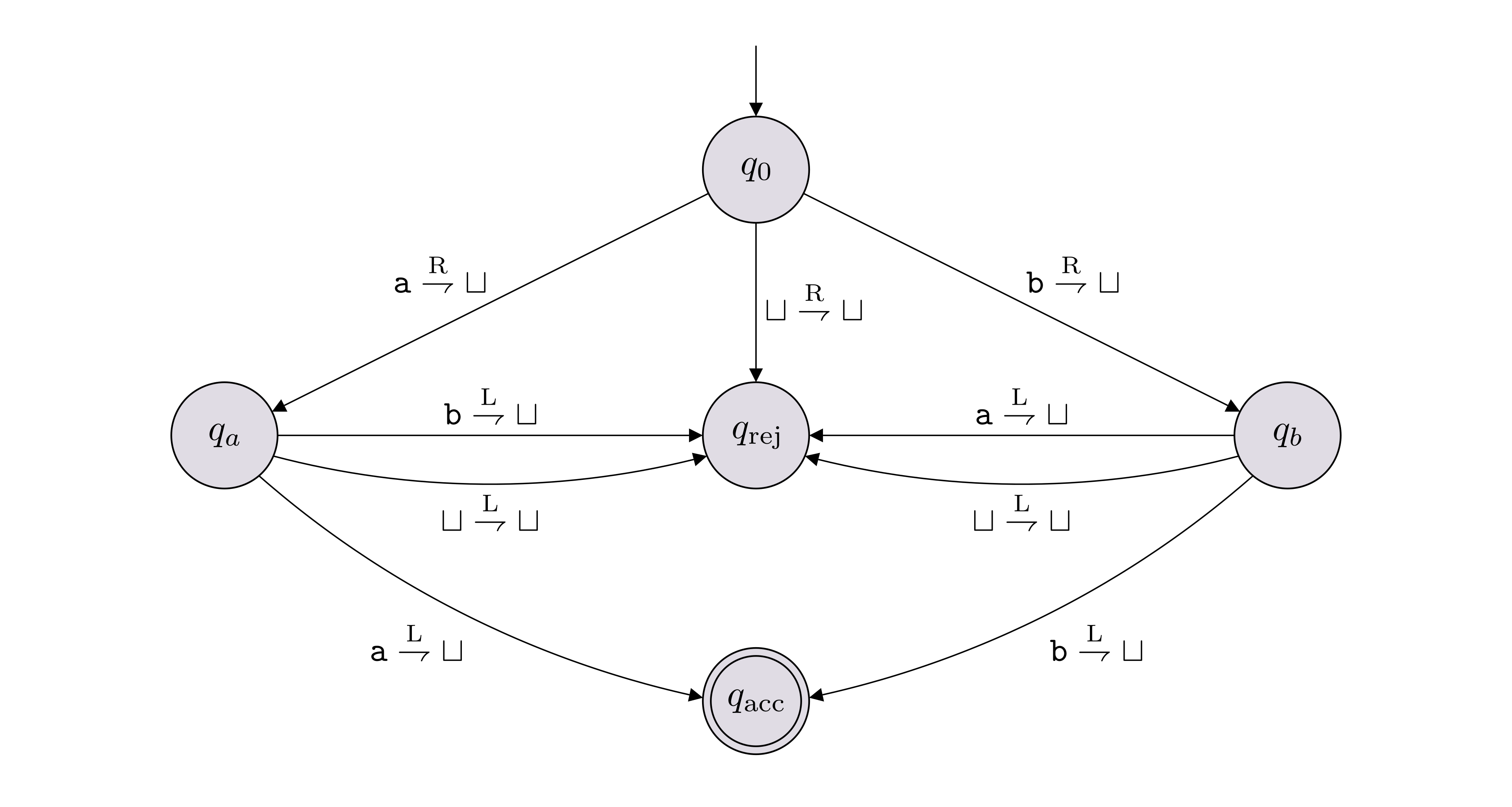

Below is an example of a state diagram of a TM with \(5\) states:

In this example, \(\Sigma = \{{\texttt{a}},{\texttt{b}}\}\), \(\Gamma = \{{\texttt{a}},{\texttt{b}},\sqcup\}\), \(Q = \{q_0,q_a,q_b,q_\text{acc},q_\text{rej}\}\). The labeled arrows between the states encode the transition function \(\delta\). As an example, the label on the arrow from \(q_0\) to \(q_a\) is \({\texttt{a}} \overset{\text{R}}{\rightharpoondown} \sqcup\), which represents \(\delta(q_0, {\texttt{a}}) = (q_a, \sqcup, \text{R})\).

A Turing machine is always accompanied by a tape that is used as memory. The tape is just a sequence of cells that can hold any symbol from the tape alphabet. The tape can be defined so that it is infinite in two directions (so we could imagine indexing the cells using the integers \(\mathbb Z\)), or it could be infinite in one direction, to the right (so we could imagine indexing the cells using the natural numbers \(\mathbb N\)). The input to a Turing machine is always a string \(w \in \Sigma^*\). The string \(w_1\ldots w_n \in \Sigma^*\) is put on the tape so that symbol \(w_i\) is placed on the cell with index \(i-1\). We imagine that there is a tape head that initially points to index 0. The symbol that the tape head points to at a particular time is the symbol that the Turing machine reads. The tape head moves left or right according to the transition function of the Turing machine. These details are explained in lecture.

In these notes, we assume our tape is infinite in two directions.

Given any DFA and any input string, the DFA always halts and makes a decision to either reject or accept the string. The same is not true for TMs and this is an important distinction between DFAs and TMs. It is possible that a TM does not make a decision when given an input string, and instead, loops forever. So given a TM \(M\) and an input string \(x\), there are 3 options when we run \(M\) on \(x\):

\(M\) accepts \(x\) (denoted \(M(x) = 1\));

\(M\) rejects \(x\) (denoted \(M(x) = 0\));

\(M\) loops forever (denoted \(M(x) = \infty\)).

The formal definitions for these 3 cases are given below.

Let \(M\) be a Turing machine where \(Q\) is the set of states, \(\sqcup\) is the blank symbol, and \(\Gamma\) is the tape alphabet. To understand how \(M\)’s computation proceeds we generally need to keep track of three things: (i) the state \(M\) is in; (ii) the contents of the tape; (iii) where the tape head is. These three things are collectively known as the “configuration” of the TM. More formally: a configuration for \(M\) is defined to be a string \(uqv \in (\Gamma \cup Q)^*\), where \(u, v \in \Gamma^*\) and \(q \in Q\). This represents that the tape has contents \(\cdots \sqcup\sqcup\sqcup uv \sqcup\sqcup\sqcup\cdots\), the head is pointing at the leftmost symbol of \(v\), and the state is \(q\). A configuration is an accepting configuration if \(q\) is \(M\)’s accept state and it is a rejecting configuration if \(q\) is \(M\)’s reject state.

Suppose that \(M\) reaches a certain configuration \(\alpha\) (which is not accepting or rejecting). Knowing just this configuration and \(M\)’s transition function \(\delta\), one can determine the configuration \(\beta\) that \(M\) will reach at the next step of the computation. (As an exercise, make this statement precise.) We write \[\alpha \vdash_M \beta\] and say that “\(\alpha\) yields \(\beta\) (in \(M\))”. If it is obvious what \(M\) we’re talking about, we drop the subscript \(M\) and just write \(\alpha \vdash \beta\).

Given an input \(x \in \Sigma^*\) we say that \(M(x)\) halts if there exists a sequence of configurations (called the computation path) \(\alpha_0, \alpha_1, \dots, \alpha_{T}\) such that:

\(\alpha_0 = q_0x\), where \(q_0\) is \(M\)’s initial state;

\(\alpha_t \vdash_M \alpha_{t+1}\) for all \(t = 0, 1, 2, \dots, T-1\);

\(\alpha_T\) is either an accepting configuration (in which case we say \(M(x)\) accepts) or a rejecting configuration (in which case we say \(M(x)\) rejects).

Otherwise, we say \(M(x)\) loops.
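The following Python sketch is one way to make the "yields" relation and the computation path concrete. A configuration \(uqv\) is stored as a triple `(u, q, v)`; the blank symbol is written `"_"`; and the machines `EVEN` and `LOOP` are hypothetical examples, not machines from these notes. Since looping cannot be detected in general, `computation_path` gives up after `max_steps` steps, which is an assumption of the sketch and not part of the definition.

```python
BLANK = "_"  # stand-in for the blank symbol (an assumption of this sketch)

def step(delta, config):
    """Return the configuration that `config` yields in one step."""
    u, q, v = config
    if v == "":
        v = BLANK  # the head sits on a blank cell to the right of uv
    q_next, written, move = delta[(q, v[0])]
    if move == "R":
        return (u + written, q_next, v[1:])
    # move == "L": the head lands on the last symbol of u, or on a blank if u is empty
    left = u[-1] if u else BLANK
    return (u[:-1], q_next, left + written + v[1:])

def computation_path(delta, q0, q_acc, q_rej, x, max_steps=10_000):
    """Return [alpha_0, ..., alpha_T], or None if no halting
    configuration is reached within max_steps steps."""
    config = ("", q0, x)
    path = [config]
    for _ in range(max_steps):
        if config[1] in (q_acc, q_rej):
            return path
        config = step(delta, config)
        path.append(config)
    return path if config[1] in (q_acc, q_rej) else None

def show(config):
    """Render a configuration (u, q, v) as the string u[q]v."""
    u, q, v = config
    return f"{u}[{q}]{v}"

# Hypothetical machine over Sigma = {a}: accepts exactly the even-length strings.
EVEN = {
    ("even", "a"): ("odd", "a", "R"),
    ("odd", "a"): ("even", "a", "R"),
    ("even", BLANK): ("acc", BLANK, "R"),
    ("odd", BLANK): ("rej", BLANK, "R"),
}

# Hypothetical machine that moves right forever and never halts.
LOOP = {("q0", "a"): ("q0", "a", "R"), ("q0", BLANK): ("q0", BLANK, "R")}

path = computation_path(EVEN, "even", "acc", "rej", "aa")
print(" |- ".join(show(c) for c in path))
```

In this representation the same configuration can be written with extra leading or trailing blanks, which is harmless because \(uqv\) always stands for a tape whose remaining cells are blank.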

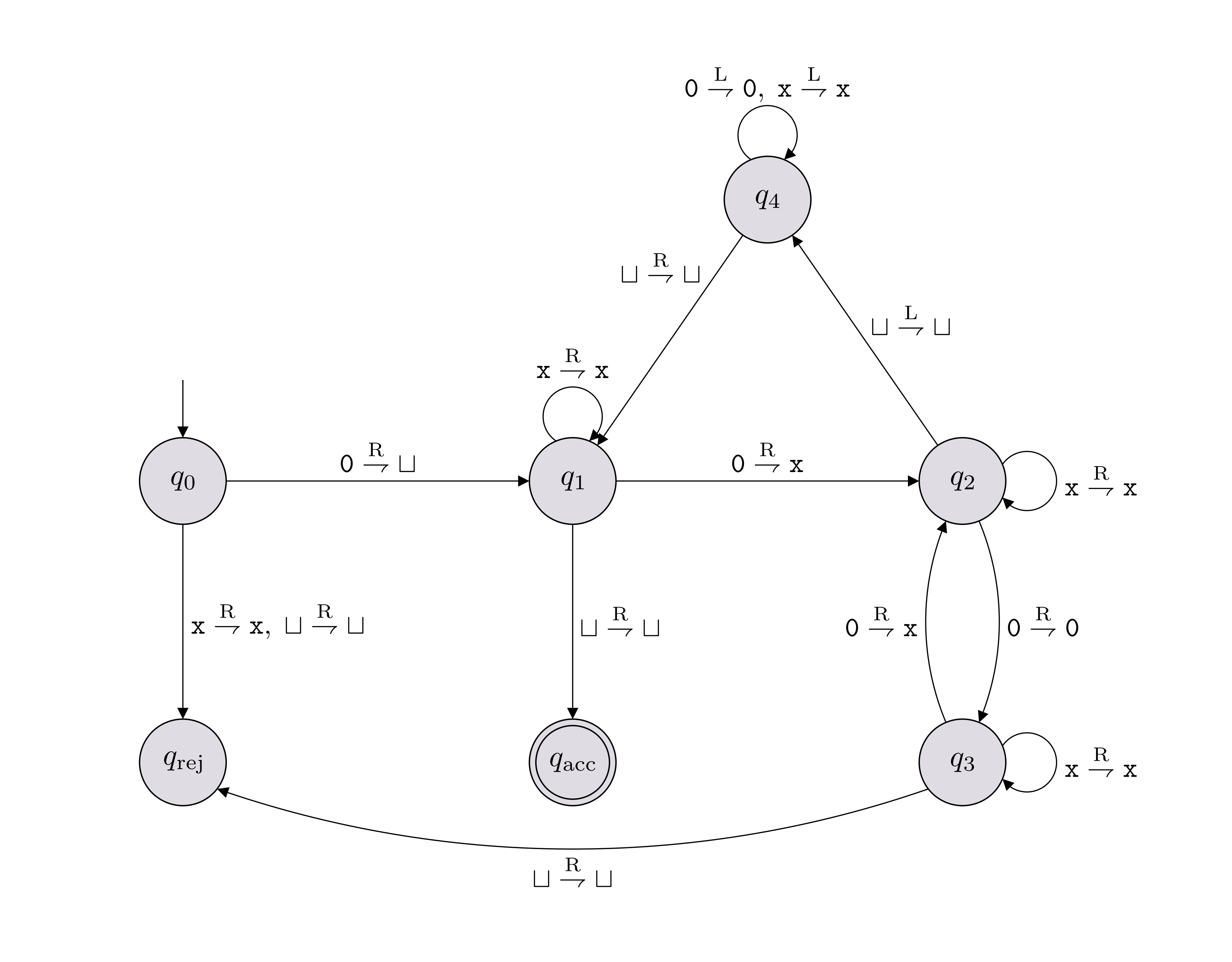

Let \(M\) denote the Turing machine shown below, which has input alphabet \(\Sigma = \{{\texttt{0}}\}\) and tape alphabet \(\Gamma = \{{\texttt{0}},{\texttt{x}}, \sqcup\}\).

Write out the computation path \[\alpha_0 \vdash_M \alpha_1 \vdash_M \cdots \vdash_M \alpha_T\] for \(M({\texttt{0000}})\) and determine whether it accepts or rejects.

Let \(f: \Sigma^* \to \{0,1\}\) be a decision problem and let \(M\) be a TM with input alphabet \(\Sigma\). We say that \(M\) solves (or decides, or computes) \(f\) if the input/output behavior of \(M\) matches \(f\) exactly, in the following sense: for all \(w \in \Sigma^*\), \(M(w) = f(w)\).

If \(L\) is the language corresponding to \(f\), the above definition is equivalent to saying that \(M\) solves (or decides, or computes) \(L\) if the following holds:

if \(w \in L\), then \(M\) accepts \(w\) (i.e. \(M(w) = 1\));

if \(w \not \in L\), then \(M\) rejects \(w\) (i.e. \(M(w) = 0\)).

If \(M\) solves a decision problem (language), \(M\) must halt on all inputs. Such a TM is called a decider.

The language of a TM \(M\) is \[L(M) = \{w \in \Sigma^* : \text{$M$ accepts $w$}\}.\] Given a TM \(M\), we cannot say that \(M\) solves/decides \(L(M)\) because \(M\) may loop forever for inputs \(w\) not in \(L(M)\). Only a decider TM can decide a language. And if \(M\) is indeed a decider, then \(L(M)\) is the unique language that \(M\) solves/decides.

Give a description of the language decided by the TM shown in Note (State diagram of a TM).

For each language below, draw the state diagram of a TM that decides the language. You can use any finite tape alphabet \(\Gamma\) containing the elements of \(\Sigma\) and the symbol \(\sqcup\).

\(L = \{{\texttt{0}}^n{\texttt{1}}^n : n \in \mathbb N\}\), where \(\Sigma = \{{\texttt{0}},{\texttt{1}}\}\).

\(L = \{{\texttt{0}}^{2^n} : n \in \mathbb N\}\), where \(\Sigma = \{{\texttt{0}}\}\).

A language \(L\) is called decidable (or computable) if there exists a TM that decides (i.e. solves) \(L\).

We denote by \(\mathsf{R}\) the set of all decidable languages (over the default alphabet \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\)).

Let \(M\) be a TM with input alphabet \(\Sigma\). We say that on input \(x\), \(M\) outputs the string \(y\) if the following hold:

\(M(x)\) halts with the halting configuration being \(uqv\) (where \(q \in \{q_\text{acc}, q_\text{rej}\}\)),

the string \(uv\) equals \(y\).

In this case we write \(M(x) = y\).

We say \(M\) solves (or computes) a function problem \(f : \Sigma^* \to \Sigma^*\) if for all \(x \in \Sigma^*\), \(M(x) = f(x)\).

The Church-Turing Thesis (CTT) states that any computation that can be conducted in this universe (constrained by the laws of physics of course), can be carried out by a TM. In other words, the set of problems that are in principle computable in this universe is captured by the complexity class \(\mathsf{R}\).

There are a couple of important things to highlight. First, CTT says nothing about the efficiency of the simulation. Second, CTT is not a mathematical statement, but a physical claim about the universe we live in (similar to claiming that the speed of light is constant). The implications of CTT are far-reaching. For example, CTT claims that any computation that can be carried out by a human can be carried out by a TM. Other implications are discussed in lecture.

We will consider three different ways of describing TMs.

A low-level description of a TM is given by specifying the 7-tuple in its definition. This information is often presented using a picture of its state diagram.

A medium-level description is an English description of the movement and behavior of the tape head, as well as how the contents of the tape change, as the computation is being carried out.

A high-level description is pseudocode or an algorithm written in English. Usually, an algorithm is written in a way so that a human can read it, understand it, and carry out its steps. By CTT, there is a TM that can carry out the same computation.

Unless explicitly stated otherwise, you can present a TM using a high-level description. From now on, we will do the same.

Let \(L\) and \(K\) be decidable languages. Show that \(\overline{L} = \Sigma^* \setminus L\), \(L \cap K\) and \(L \cup K\) are also decidable by presenting high-level descriptions of TMs deciding them.

Fix \(\Sigma = \{{\texttt{3}}\}\) and let \(L \subseteq \{{\texttt{3}}\}^*\) be defined as follows: \(x \in L\) if and only if \(x\) appears somewhere in the decimal expansion of \(\pi\). For example, the strings \(\epsilon\), \({\texttt{3}}\), and \({\texttt{33}}\) are all definitely in \(L\), because \[\pi = 3.1415926535897932384626433\dots\] Prove that \(L\) is decidable. No knowledge in number theory is required to solve this question.

In Chapter 1 we saw that we can use the notation \(\left \langle \cdot \right\rangle\) to denote an encoding of objects belonging to any countable set. For example, if \(D\) is a DFA, we can write \(\left \langle D \right\rangle\) to denote the encoding of \(D\) as a string. If \(M\) is a TM, we can write \(\left \langle M \right\rangle\) to denote the encoding of \(M\). There are many ways one can encode DFAs and TMs. We will not be describing a specific encoding scheme as this detail will not be important for us.

Recall that when we want to encode a tuple of objects, we use the comma sign. For example, if \(M_1\) and \(M_2\) are two Turing machines, we write \(\left \langle M_1, M_2 \right\rangle\) to denote the encoding of the tuple \((M_1,M_2)\). As another example, if \(M\) is a TM and \(x \in \Sigma^*\), we can write \(\left \langle M, x \right\rangle\) to denote the encoding of the tuple \((M,x)\).

The fact that we can encode a Turing machine (or a piece of code) means that an input to a TM can be the encoding of another TM (in fact, a TM can take the encoding of itself as the input). This point of view has several important implications, one of which is the fact that we can come up with a Turing machine, which given as input the description of any Turing machine, can simulate it. This simulator Turing machine is called a universal Turing machine.

Let \(\Sigma\) be some finite alphabet. A universal Turing machine \(U\) is a Turing machine that takes \(\left \langle M, x \right\rangle\) as input, where \(M\) is a TM and \(x\) is a word in \(\Sigma^*\), and has the following high-level description:

\[\begin{aligned} \hline\hline\\[-12pt] &\textbf{def}\;\; U(\left \langle \text{TM } M, \; \text{string } x \right\rangle):\\ &{\scriptstyle 1.}~~~~\texttt{Simulate $M$ on input $x$ (i.e. run $M(x)$)}\\ &{\scriptstyle 2.}~~~~\texttt{If it accepts, accept.}\\ &{\scriptstyle 3.}~~~~\texttt{If it rejects, reject.}\\ \hline\hline\end{aligned}\]

Note that if \(M(x)\) loops forever, then \(U\) loops forever as well. To make sure \(U\) always halts, we can add a third input, an integer \(k\), and have the universal machine simulate the input TM for at most \(k\) steps.
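A rough Python sketch of the step-bounded variant is given below. It is only an illustration under several assumptions: the input machine is passed as a dictionary rather than as an encoded string, the tape is a dictionary indexed by integers with `"_"` as the blank symbol, and the function returns `None` when the machine has not halted within \(k\) steps (the text above does not say what the bounded machine should do in that case). The names `universal_bounded`, `EVEN` and `LOOP` are hypothetical.

```python
BLANK = "_"  # stand-in for the blank symbol (an assumption of this sketch)

def universal_bounded(machine, x, k):
    """Simulate `machine` on input x for at most k steps.

    Returns 1 if it accepts, 0 if it rejects, and None if it has not
    reached a halting state after k steps (a choice made by this sketch).
    """
    delta = machine["delta"]
    state = machine["start"]
    tape = {i: symbol for i, symbol in enumerate(x)}  # cell i holds x[i]; other cells are blank
    head = 0
    steps = 0
    while True:
        if state == machine["accept"]:
            return 1
        if state == machine["reject"]:
            return 0
        if steps == k:
            return None
        new_state, written, move = delta[(state, tape.get(head, BLANK))]
        tape[head] = written
        head += 1 if move == "R" else -1
        state = new_state
        steps += 1

# Hypothetical machine over Sigma = {a}: accepts exactly the even-length strings.
EVEN = {
    "start": "even", "accept": "acc", "reject": "rej",
    "delta": {
        ("even", "a"): ("odd", "a", "R"),
        ("odd", "a"): ("even", "a", "R"),
        ("even", BLANK): ("acc", BLANK, "R"),
        ("odd", BLANK): ("rej", BLANK, "R"),
    },
}

# Hypothetical machine that never halts.
LOOP = {
    "start": "q0", "accept": "acc", "reject": "rej",
    "delta": {("q0", "a"): ("q0", "a", "R"), ("q0", BLANK): ("q0", BLANK, "R")},
}
```

With this design the simulator halts on every input, at the cost of sometimes not learning the answer: a `None` result says nothing about whether the simulated machine would eventually accept, reject, or loop.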

Above, when denoting the encoding of a tuple \((M, x)\) where \(M\) is a Turing machine and \(x\) is a string, we used \(\left \langle \text{TM } M, \text{string } x \right\rangle\) to make clear what the types of \(M\) and \(x\) are. We will make use of this notation from now on and specify the types of the objects being referenced within the encoding notation.

When we give a high-level description of a TM, we often assume that the input given is of the correct form/type. For example, with the Universal TM above, we assumed that the input was the encoding \(\left \langle \text{TM } M, \; \text{string } x \right\rangle\). But technically, the input to the universal TM (and any other TM) is allowed to be any finite-length string. What do we do if the input string does not correspond to a valid encoding of an expected type of input object?

Even though this is not explicitly written, we will implicitly assume that the first thing our machine does is check whether the input is a valid encoding of objects with the expected types. If it is not, the machine rejects. If it is, then it will carry on with the specified instructions.

The important thing to keep in mind is that in our descriptions of Turing machines, this step of checking whether the input string has the correct form (i.e. that it is a valid encoding) will never be explicitly written, and we don’t expect you to explicitly write it either. That being said, be aware that this check is implicitly there.

Fix some alphabet \(\Sigma\).

We call a DFA \(D\) satisfiable if there is some input string that \(D\) accepts. In other words, \(D\) is satisfiable if \(L(D) \neq \varnothing\).

We say that a DFA \(D\) self-accepts if \(D\) accepts the string \(\left \langle D \right\rangle\). In other words, \(D\) self-accepts if \(\left \langle D \right\rangle \in L(D)\).

We define the following languages: \[\begin{aligned} \text{ACCEPTS}_{\text{DFA}}& = \{\left \langle D,x \right\rangle : \text{$D$ is a DFA that accepts the string $x$}\}, \\ \text{SA}_{\text{DFA}}= \text{SELF-ACCEPTS}_{\text{DFA}}& = \{\left \langle D \right\rangle : \text{$D$ is a DFA that self-accepts}\}, \\ \text{SAT}_{\text{DFA}}& = \{\left \langle D \right\rangle : \text{$D$ is a satisfiable DFA} \}, \\ \text{NEQ}_{\text{DFA}}& = \{ \left \langle D_1, D_2 \right\rangle : \text{$D_1$ and $D_2$ are DFAs with $L(D_1) \neq L(D_2)$} \}.\end{aligned}\]

The languages \(\text{ACCEPTS}_{\text{DFA}}\) and \(\text{SA}_{\text{DFA}}\) are decidable.

The language \(\text{SAT}_{\text{DFA}}\) is decidable.

The language \(\text{NEQ}_{\text{DFA}}\) is decidable.
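As an illustration of why the first two kinds of questions can be answered by an algorithm, here is a Python sketch of one standard approach: simulate the DFA to decide acceptance, and search the state diagram for a reachable accepting state to decide satisfiability. A DFA is represented as a tuple `(Q, Sigma, delta, q0, F)` with `delta` a dictionary; this representation and the example DFAs `ODD_ONES` and `DEAD` are assumptions of the sketch, and it works on DFA objects directly rather than on their encodings.

```python
from collections import deque

def accepts(dfa, x):
    """Decide whether the DFA accepts the string x by simulating it."""
    Q, Sigma, delta, q0, F = dfa
    q = q0
    for c in x:
        q = delta[(q, c)]
    return q in F

def satisfiable(dfa):
    """Decide whether L(D) is non-empty: is some accepting state reachable from q0?"""
    Q, Sigma, delta, q0, F = dfa
    seen, queue = {q0}, deque([q0])
    while queue:
        q = queue.popleft()
        if q in F:
            return True
        for c in Sigma:
            r = delta[(q, c)]
            if r not in seen:
                seen.add(r)
                queue.append(r)
    return False

# Hypothetical DFA over {0, 1}: accepts strings with an odd number of 1s.
ODD_ONES = (
    {"e", "o"}, {"0", "1"},
    {("e", "0"): "e", ("e", "1"): "o", ("o", "0"): "o", ("o", "1"): "e"},
    "e", {"o"},
)

# Hypothetical DFA whose only accepting state is unreachable.
DEAD = (
    {"s", "t"}, {"0", "1"},
    {("s", "0"): "s", ("s", "1"): "s", ("t", "0"): "s", ("t", "1"): "s"},
    "s", {"t"},
)
```

Both procedures halt on every input: the simulation takes one step per input symbol, and the search visits each of the finitely many states at most once.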

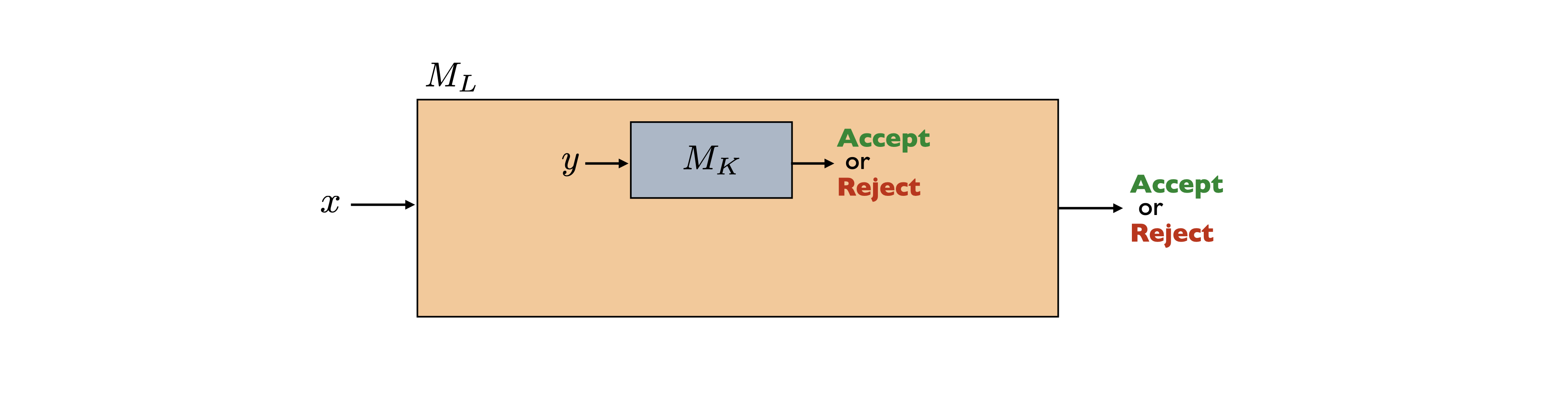

Suppose \(L\) and \(K\) are two languages and \(K\) is decidable. We say that solving \(L\) reduces to solving \(K\) if given a decider \(M_K\) for \(K\), we can construct a decider for \(L\) that uses \(M_K\) as a subroutine (i.e. helper function), thereby establishing \(L\) is also decidable. For example, the proof of Theorem (\(\text{NEQ}_{\text{DFA}}\) is decidable) shows that solving \(\text{NEQ}_{\text{DFA}}\) reduces to solving \(\text{SAT}_{\text{DFA}}\).

A reduction is conceptually straightforward but also a powerful tool to expand the landscape of decidable languages.
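The shape of such a reduction can be sketched in Python as follows. The sketch assumes the usual product construction for the symmetric difference of two DFAs over the same alphabet, so that \(L(D_1) \neq L(D_2)\) exactly when the product DFA is satisfiable; whether this matches the proof referenced above in every detail is an assumption. The function names and the example DFAs are hypothetical, and DFAs are represented as tuples `(Q, Sigma, delta, q0, F)`.

```python
def sat_dfa(dfa):
    """Helper decider for SAT_DFA: is some accepting state reachable?"""
    Q, Sigma, delta, q0, F = dfa
    seen, stack = {q0}, [q0]
    while stack:
        q = stack.pop()
        if q in F:
            return True
        for c in Sigma:
            r = delta[(q, c)]
            if r not in seen:
                seen.add(r)
                stack.append(r)
    return False

def symmetric_difference(d1, d2):
    """Product DFA accepting the strings accepted by exactly one of d1, d2."""
    Q1, Sigma, t1, s1, F1 = d1
    Q2, _, t2, s2, F2 = d2
    Q = {(p, q) for p in Q1 for q in Q2}
    delta = {((p, q), c): (t1[(p, c)], t2[(q, c)]) for (p, q) in Q for c in Sigma}
    F = {(p, q) for (p, q) in Q if (p in F1) != (q in F2)}
    return (Q, Sigma, delta, (s1, s2), F)

def neq_dfa(d1, d2):
    """Decider for NEQ_DFA that calls the SAT_DFA decider as a subroutine."""
    return sat_dfa(symmetric_difference(d1, d2))

SIGMA = {"0", "1"}
# Hypothetical DFAs: ODD and ODD3 both accept "odd number of 1s"; EVEN accepts the complement.
ODD = ({"e", "o"}, SIGMA,
       {("e", "0"): "e", ("e", "1"): "o", ("o", "0"): "o", ("o", "1"): "e"},
       "e", {"o"})
EVEN = ({"e", "o"}, SIGMA, ODD[2], "e", {"e"})
ODD3 = ({"a", "b", "c"}, SIGMA,
        {("a", "0"): "a", ("a", "1"): "b", ("b", "0"): "b",
         ("b", "1"): "c", ("c", "0"): "c", ("c", "1"): "b"},
        "a", {"b"})
```

The point of the design is that `neq_dfa` contains no search of its own: all the work of exploring states is delegated to the helper for the other language.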

Let \(L = \{\left \langle D_1, D_2 \right\rangle: \text{$D_1$ and $D_2$ are DFAs with $L(D_1) \subsetneq L(D_2)$}\}\). Show that \(L\) is decidable.

Let \(K = \{\left \langle D \right\rangle: \text{$D$ is a DFA that accepts $w^R$ whenever it accepts $w$}\}\), where \(w^R\) denotes the reversal of \(w\). Show that \(K\) is decidable. For this problem, you can use the fact that given a DFA \(D\), there is an algorithm to construct a DFA \(D'\) such that \(L(D') = L(D)^R = \{w^R : w \in L(D)\}\).

We can relax the notion of a TM \(M\) solving a language \(L\) in the following way. We say that \(M\) semi-decides \(L\) if for all \(w \in \Sigma^*\), \[w \in L \quad \Longleftrightarrow \quad M(w) \text{ accepts}.\] Equivalently,

if \(w \in L\), then \(M\) accepts \(w\) (i.e. \(M(w) = 1\));

if \(w \not \in L\), then \(M\) does not accept \(w\) (i.e. \(M(w) \in \{0,\infty\}\)).

(Note that if \(w \not \in L\), \(M(w)\) may loop forever.)

We call \(M\) a semi-decider for \(L\).

A language \(L\) is called semi-decidable if there exists a TM that semi-decides \(L\).

We denote by \(\mathsf{RE}\) the set of all semi-decidable languages (over the default alphabet \(\Sigma = \{{\texttt{0}}, {\texttt{1}}\}\)).

A language \(L\) is decidable if and only if both \(L\) and \(\overline{L} = \Sigma^* \setminus L\) are semi-decidable.
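One direction of this statement rests on running a semi-decider for \(L\) and a semi-decider for \(\overline{L}\) in alternation, one step at a time. The Python sketch below illustrates that idea under a modeling assumption: each semi-decider is a generator that yields once per step and returns `True` (accept) or `False` (reject) if it halts. The example language (strings whose length is a perfect square) and all function names are hypothetical.

```python
def decide(semi_L, semi_co_L, w):
    """Alternate single steps of the two semi-deciders until one of them halts."""
    runs = ((semi_L(w), True), (semi_co_L(w), False))
    while True:
        for run, in_L_if_accept in runs:
            try:
                next(run)  # advance this machine by one step
            except StopIteration as halt:
                accepted = bool(halt.value)
                # Accepting in semi_L (or rejecting in semi_co_L) means w is in L.
                return accepted == in_L_if_accept

def semi_square(w):
    """Semi-decides {w : len(w) is a perfect square}; loops forever otherwise."""
    n = 0
    while True:
        if n * n == len(w):
            return True
        yield
        n += 1

def semi_nonsquare(w):
    """Semi-decides the complement; loops forever when len(w) is a perfect square."""
    n = 0
    while True:
        if n * n < len(w) < (n + 1) * (n + 1):
            return True
        yield
        n += 1
```

Since every string lies in exactly one of \(L\) and \(\overline{L}\), one of the two runs must eventually accept, so `decide` always returns even though each semi-decider on its own may loop.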

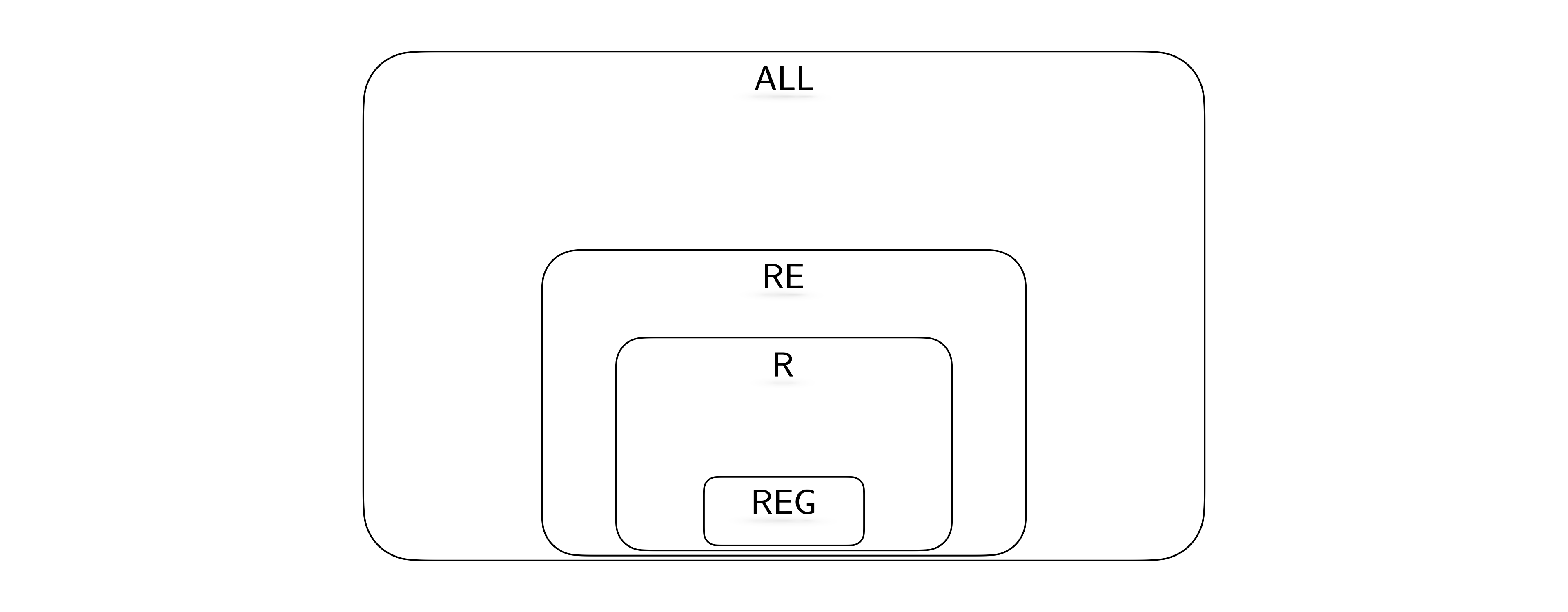

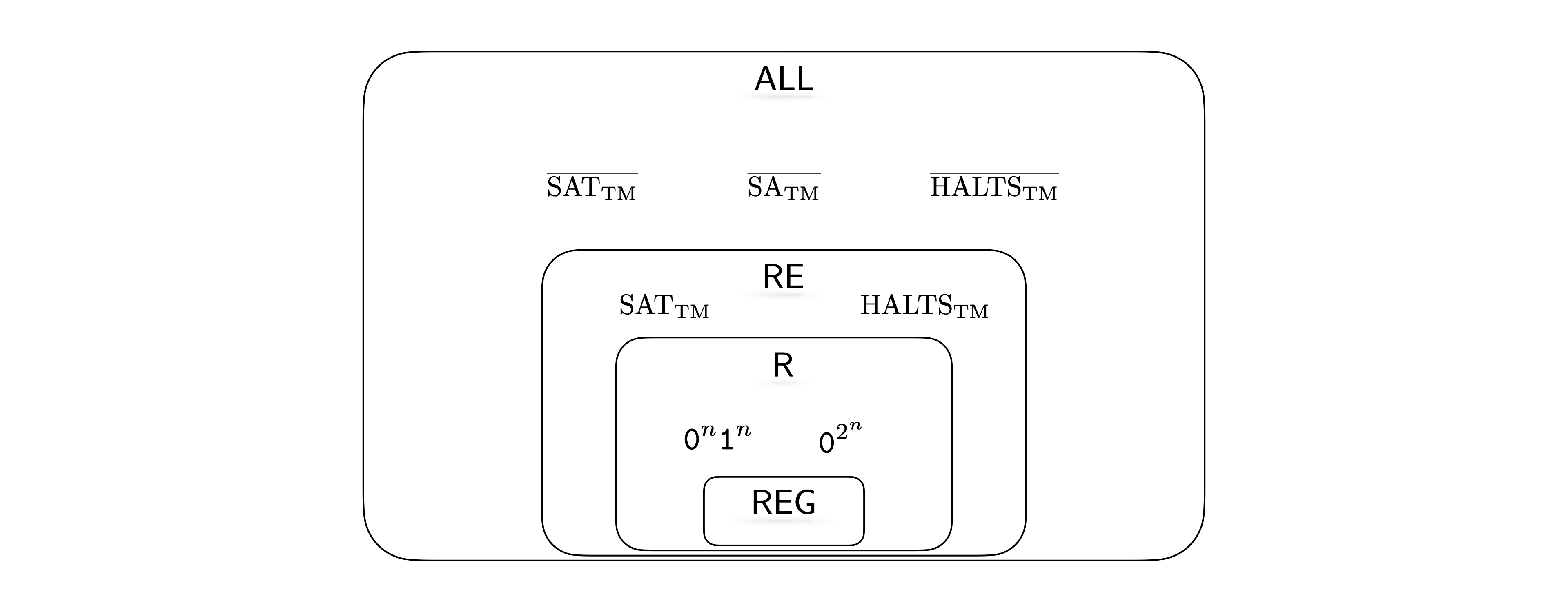

Observe that any regular language is decidable since for every DFA, we can construct a TM with the same behavior (we ask you to make this precise as an exercise below). Also observe that if a language is decidable, then it is also semi-decidable. Based on these observations, we know \(\mathsf{REG}\subseteq \mathsf{R}\subseteq \mathsf{RE}\subseteq \mathsf{ALL}\).

Furthermore, we know \(\{{\texttt{0}}^n {\texttt{1}}^n : n \in \mathbb N\}\) is decidable, but not regular. Therefore we know that the first inclusion above is strict. We will next investigate whether the other inclusions are strict or not.

What are the \(7\) components in the formal definition of a Turing machine?

True or false: It is possible that in the definition of a TM, \(\Sigma = \Gamma\), where \(\Sigma\) is the input alphabet, and \(\Gamma\) is the tape alphabet.

True or false: On every valid input, any TM either accepts or rejects.

In the \(7\)-tuple definition of a TM, the tape does not appear. Why is this the case?

What is the set of all possible inputs for a TM \(M = (Q, \Sigma, \Gamma, \delta, q_0, q_\text{acc}, q_\text{rej})\)?

In the definition of a TM, the number of states is restricted to be finite. What is the reason for this restriction?

State 4 differences between TMs and DFAs.

True or false: Consider a TM such that the starting state \(q_0\) is also the accepting state \(q_\text{acc}\). It is possible that this TM does not halt on some inputs.

Let \(D = (Q, \Sigma, \delta, q_0, F)\) be a DFA. Define a decider TM \(M\) (specifying the components of the 7-tuple) such that \(L(M) = L(D)\).

What are the 3 components of a configuration of a Turing machine? How is a configuration typically written?

True or false: We say that a language \(K\) is a decidable language if there exists a Turing machine \(M\) such that \(K = L(M)\).

What is a decider TM?

True or false: For each decidable language, there is exactly one TM that decides it.

What do we mean when we say “a high-level description of a TM”?

What is a universal TM?

True or false: A universal TM is a decider.

What is the Church-Turing thesis?

What is the significance of the Church-Turing thesis?

True or false: \(L \subseteq \Sigma^*\) is undecidable if and only if \(\Sigma^* \backslash L\) is undecidable.

Is the following statement true, false, or hard to determine with the knowledge we have so far? \(\varnothing\) is decidable.

Is the following statement true, false, or hard to determine with the knowledge we have so far? \(\Sigma^*\) is decidable.

Is the following statement true, false, or hard to determine with the knowledge we have so far? The language \(\{\left \langle M \right\rangle : \text{$M$ is a TM with $L(M) \neq \varnothing$}\}\) is decidable.

True or false: Let \(L \subseteq \{{\texttt{0}},{\texttt{1}}\}^*\) be defined as follows: \[L = \begin{cases} \{{\texttt{0}}^n{\texttt{1}}^n : n \in \mathbb N\} & \text{if the Goldbach conjecture is true;} \\ \{{\texttt{1}}^{2^n} : n \in \mathbb N\} & \text{otherwise.} \end{cases}\] \(L\) is decidable. (Feel free to Google what Goldbach conjecture is.)

Let \(L\) and \(K\) be two languages. What does “\(L\) reduces to \(K\)” mean? Give an example of \(L\) and \(K\) such that \(L\) reduces to \(K\).

Here are the important things to keep in mind from this chapter.

Understand what the key differences and similarities between DFAs and TMs are.

Given a TM, describe in English the language that it solves.

Given the description of a decidable language, come up with a TM that decides it. Note that there are various ways we can describe a TM (what we refer to as the low level, medium level, and the high level representations). You may need to present a TM in any of these levels.

What is the definition of a configuration and why is it important?

The TM computational model describes a very simple programming language.

Even though the definition of a TM is far simpler than any other programming language that you use, convince yourself that it is powerful enough to capture any computation that can be expressed in your favorite programming language. This is a fact that can be proved formally, but we do not do so since it would be extremely long and tedious.

What is the Church-Turing thesis and what does it imply? Note that we do not need to invoke the Church-Turing thesis for the previous point. The Church-Turing thesis is a much stronger claim. It captures our belief that any kind of algorithm that can be carried out by any kind of a natural process can be simulated on a Turing machine. This is not a mathematical statement that can be proved but rather a statement about our universe and the laws of physics.

The set of Turing machines is encodable (i.e. every TM can be represented using a finite-length string). This implies that an algorithm (or Turing machine) can take as input (the encoding of) another Turing machine. This idea is the basis for the universal Turing machine. It allows us to have one (universal) machine/algorithm that can simulate any other machine/algorithm that is given as input.

This chapter contains several algorithms that take encodings of DFAs as input. So these are algorithms that take as input the text representations of other algorithms. There are several interesting questions that one might want to answer given the code of an algorithm (e.g. does it accept a certain input, or do two algorithms with different representations solve exactly the same computational problem). We explore some of these questions in the context of DFAs. It is important you get used to the idea of treating code (which may represent a DFA or a TM) as data/input.

This chapter also introduces the idea of a reduction (the idea of solving a computational problem by using, as a helper function, an algorithm that solves a different problem). Reductions will come up again in future chapters, and it is important that you have a solid understanding of what a reduction is.

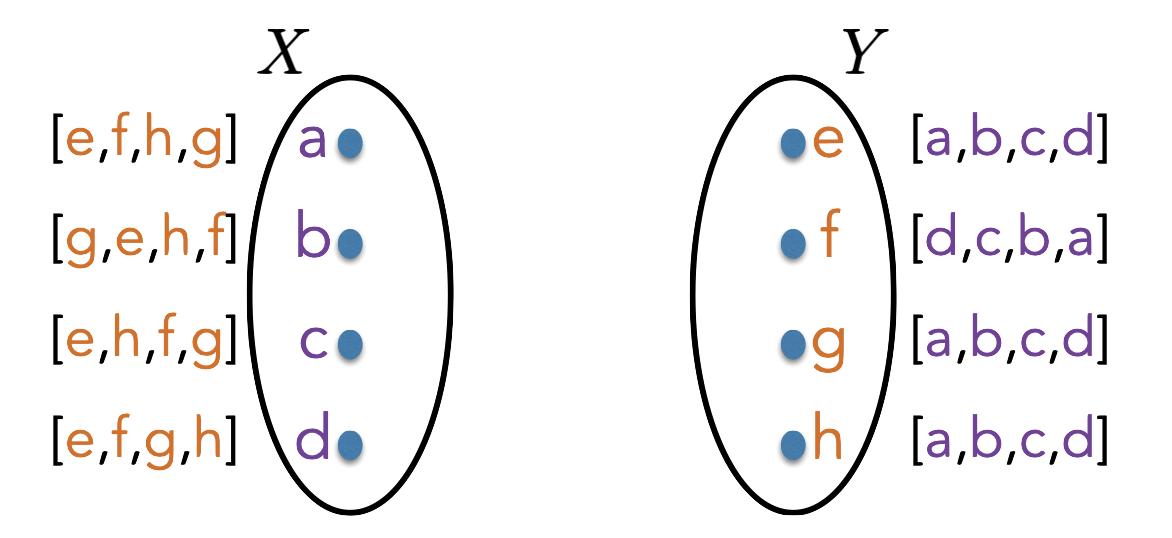

Let \(X\) and \(Y\) be two (possibly infinite) sets.

A function \(f: X \to Y\) is called injective if for any \(x,x' \in X\) such that \(x \neq x'\), we have \(f(x) \neq f(x')\). We write \(X \hookrightarrow Y\) if there exists an injective function from \(X\) to \(Y\).

A function \(f: X \to Y\) is called surjective if for all \(y \in Y\), there exists an \(x \in X\) such that \(f(x) = y\). We write \(X \twoheadrightarrow Y\) if there exists a surjective function from \(X\) to \(Y\).

A function \(f: X \to Y\) is called bijective (or a one-to-one correspondence) if it is both injective and surjective. We write \(X \leftrightarrow Y\) if there exists a bijective function from \(X\) to \(Y\).

Let \(X, Y\) and \(Z\) be three (possibly infinite) sets. Then,

\(X \hookrightarrow Y\) if and only if \(Y \twoheadrightarrow X\);

if \(X \hookrightarrow Y\) and \(Y \hookrightarrow Z\), then \(X \hookrightarrow Z\);

\(X \leftrightarrow Y\) if and only if \(X \hookrightarrow Y\) and \(Y \hookrightarrow X\).

Let \(X\) and \(Y\) be two sets.

We write \(|X| = |Y|\) if \(X \leftrightarrow Y\).

We write \(|X| \leq |Y|\) (or \(|Y| \geq |X|\)) if \(X \hookrightarrow Y\), or equivalently, if \(Y \twoheadrightarrow X\).

We write \(|X| < |Y|\) (or \(|Y| > |X|\)) if it is not the case that \(|X| \geq |Y|\).

Theorem (Relationships between different types of functions) justifies the use of the notation \(=\), \(\leq\), \(\geq\), \(<\) and \(>\). The properties we would expect to hold for this type of notation indeed do hold. For example,

\(|X| = |Y|\) if and only if \(|X| \leq |Y|\) and \(|Y| \leq |X|\),

if \(|X| \leq |Y| \leq |Z|\), then \(|X| \leq |Z|\),

if \(|X| \leq |Y| < |Z|\), then \(|X| < |Z|\), and so on.

If \(X \subseteq Y\), then \(|X| \leq |Y|\) since the identity function that maps \(x \in X\) to \(x \in Y\) is an injection.

Let \(\mathbb S= \{0, 1, 4, 9,\ldots\}\) be the set of squares. Then \(|\mathbb S| = |\mathbb N|\).

Let \(\mathbb Z\) be the set of integers. Then \(|\mathbb Z| = |\mathbb N|\).

Let \(\mathbb Z\times \mathbb Z\) be the set of tuples with integer coordinates. Then \(|\mathbb Z\times \mathbb Z| = |\mathbb N|\).

Let \(\mathbb Q\) be the set of rational numbers. Then \(|\mathbb Q| = |\mathbb N|\).

Let \(\Sigma\) be a finite non-empty set. Then \(|\Sigma^*| = |\mathbb N|\).
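For the last statement, one explicit bijection lists the words of \(\Sigma^*\) by length and, within each length, in dictionary order. The Python sketch below implements that listing and its inverse; it is one possible bijection, not necessarily the one used in the proof, and the function names are hypothetical. The alphabet is passed as a string of distinct symbols.

```python
def nat_to_word(n, alphabet):
    """Return the n-th word of Sigma^* in length-then-dictionary order (n = 0 gives the empty word)."""
    k = len(alphabet)
    symbols = []
    while n > 0:
        n -= 1
        symbols.append(alphabet[n % k])
        n //= k
    return "".join(reversed(symbols))

def word_to_nat(w, alphabet):
    """Inverse of nat_to_word: the position of w in the listing."""
    k = len(alphabet)
    n = 0
    for c in w:
        n = n * k + alphabet.index(c) + 1
    return n

print([nat_to_word(n, "01") for n in range(8)])
```

Every word appears at exactly one position in the listing, which is what makes the two functions inverses of each other.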

If \(S\) is an infinite set and \(|S| \leq |\mathbb N|\), then \(|S| = |\mathbb N|\).

Prove the above theorem.

Let \(\mathbb{P} = \{2, 3, 5, 7, \ldots\}\) be the set of prime numbers. Then \(|\mathbb{P}| = |\mathbb N|\).

A set \(S\) is called countable if \(|S| \leq |\mathbb N|\).

A set \(S\) is called countably infinite if it is countable and infinite.

A set \(S\) is called uncountable if it is not countable, i.e. \(|S| > |\mathbb N|\).

Theorem (No cardinality strictly between finite and \(|\mathbb N|\)) implies that if \(S\) is countable, there are two options: either \(S\) is finite, or \(|S| = |\mathbb N|\).

Recall that a set \(S\) is encodable if for some alphabet \(\Sigma\), there is an injection from \(S\) to \(\Sigma^*\), or equivalently, \(|S| \leq |\Sigma^*|\) (Definition (Encoding elements of a set)). Since \(|\mathbb N| = |\Sigma^*|\), we see that a set is countable if and only if it is encodable. Therefore, one can show that a set is countable by showing that it is encodable. We will call this the “CS method” for showing countability.

The standard definition of countability (\(|S| \leq |\mathbb N|\)) highlights the following heuristic.

If you can list the elements of \(S\) in a way that every element appears in the list eventually, then \(S\) is countable.

The encodability definition highlights another heuristic that is familiar in computer science.

If you can “write down” each element of \(S\) using a finite number of symbols, then \(S\) is countable.

The set of all polynomials in one variable with rational coefficients is countable.
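In the spirit of the CS method, the sketch below writes each such polynomial as a finite string over the alphabet consisting of the ten digits and the symbols `-`, `/` and `;`. A polynomial is represented as a tuple of coefficients `(c_0, c_1, ..., c_d)` with no trailing zero coefficient, so that each polynomial has exactly one representation; this representation and the function names are assumptions of the sketch. The `decode` function recovers the polynomial from its string, which shows the encoding is injective.

```python
from fractions import Fraction

def encode(poly):
    """Write a tuple of Fraction coefficients as a string over {0,...,9,-,/,;}."""
    return ";".join(f"{c.numerator}/{c.denominator}" for c in poly)

def decode(s):
    """Recover the coefficient tuple from its encoding."""
    if s == "":
        return ()  # the zero polynomial, represented by the empty tuple
    return tuple(Fraction(int(a), int(b)) for a, b in (t.split("/") for t in s.split(";")))

# 1/2 - (3/4) x^2, written as (c_0, c_1, c_2)
p = (Fraction(1, 2), Fraction(0), Fraction(-3, 4))
print(encode(p))
```

The sketch relies on `Fraction` storing every rational in lowest terms with a positive denominator, so equal coefficients always produce the same substring.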

Show that the following sets are countable using the CS method.

\(\mathbb Z\times \mathbb Z\times \mathbb Z\).

The set of all functions \(f: S \to \mathbb N\), where \(S\) is some fixed finite set.

The following are some examples of how various mathematical objects have a natural representation as a function.

Sets. A set \(S \subseteq X\) can be viewed as a function \(f_S: X \to \{0,1\}\), where \(f_S(x) = 1\) if and only if \(x \in S\). This is called the characteristic function of the set. Recall that we made this observation in Important Note (Correspondence between decision problems and languages).

Finite sequences. A sequence \(s\) of length \(k\) with elements from a set \(Y\) can be viewed as a function \(f_s : \{0,1,2,\ldots,k-1\} \to Y\), where \(f_s(i)\) is the \(i\)’th element of the sequence (starting counting from \(0\)). In other words, given \(f_s\), the corresponding sequence is \((f_s(0), f_s(1), \ldots, f_s(k-1))\).

Infinite sequences. Similarly, an infinite-length sequence \(s\) with elements from \(Y\) can be viewed as a function \(f_s : \mathbb N\to Y\).

Numbers. Numbers can be viewed as functions since the binary representation of a number is a sequence of bits (possibly infinite-length).

For example, the set of all real numbers between \(0\) and \(1\) (inclusive) is denoted by \([0,1]\). Every function \(f : \mathbb N\to \{0,1\}\) represents a real number in \([0,1]\) in binary, namely \(0.f(0)f(1)f(2)\ldots\).

Let \(X\) be any set, and let \(\mathcal{F}\) be a set of functions \(f: X \to Y\) where \(|Y| \geq 2\). If \(|X| \geq |\mathcal{F}|\), we can construct a function \(f_D : X \to Y\) such that \(f_D \not \in \mathcal{F}\).

When we apply the above lemma to construct an explicit \(f_D \not\in \mathcal{F}\), we call that diagonalizing against the set \(\mathcal{F}\). And we call \(f_D\) a diagonal element. Typically there are several choices for the definition of \(f_D\):

Different injections \(\phi : \mathcal{F}\to X\) can lead to different diagonal elements.

If \(|Y| > 2\), we have more than one choice on what value we assign to \(f_D(x_f)\) that makes \(f_D(x_f) \neq f(x_f)\) (here \(x_f\) denotes \(\phi(f)\)).

If there are elements \(x \in X\) not in the range of \(\phi\), then we can define \(f_D(x)\) any way we like.

Note that diagonalizing against a set \(\mathcal{F}\) produces an explicit function \(f_D\) not in \(\mathcal{F}\). Therefore, in situations where you wish to find an explicit object that is not in a given set, you should consider if diagonalization could be applied.
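The construction, together with the choices listed above, can be tried out on a small finite example. In the Python sketch below, functions are dictionaries from `X` to `Y`, the family is a list, and `phi[i]` is the element of `X` assigned to the `i`-th function. The function name `diagonalize`, the fixed choice of the first two elements of `Y`, and the example data are all assumptions made for illustration.

```python
def diagonalize(X, Y, family, phi):
    """Build f_D : X -> Y that differs from family[i] at the input phi[i]."""
    assert len(set(Y)) >= 2, "Y needs at least two elements"
    assert len(set(phi)) == len(phi) == len(family), "phi must be injective"
    y0, y1 = Y[0], Y[1]
    # Elements of X outside the range of phi may get any value; this sketch picks y0.
    f_D = {x: y0 for x in X}
    for f, x_f in zip(family, phi):
        # Pick a value different from f(x_f); with |Y| > 2 other choices are possible.
        f_D[x_f] = y1 if f[x_f] == y0 else y0
    return f_D

X = [0, 1, 2, 3]
Y = ["a", "b", "c"]
family = [
    {0: "a", 1: "a", 2: "a", 3: "a"},
    {0: "b", 1: "c", 2: "a", 3: "b"},
    {0: "a", 1: "b", 2: "c", 3: "c"},
]
phi = [2, 0, 3]  # injective assignment of an input to each function in the family

f_D = diagonalize(X, Y, family, phi)
print(f_D)
```

Changing `phi`, or changing which value is chosen at each \(x_f\), gives a different diagonal element, but each one is guaranteed to lie outside the family.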

If \(\mathcal{F}\) is the set of all functions \(f: X \to Y\) (and \(|Y| \geq 2\)), then \(|X| < |\mathcal{F}|\).

Using the corollary above, give an example of an uncountable set.

For any set \(X\), \(|X| < |\wp(X)|\).
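For a small finite set, this statement can be checked exhaustively. The Python sketch below takes any map \(g : X \to \wp(X)\) and forms the set \(\{x \in X : x \notin g(x)\}\), which is the usual diagonal set and is never in the image of \(g\). The function names are hypothetical, and the check over a three-element set illustrates the argument without replacing the proof for arbitrary \(X\).

```python
from itertools import combinations, product

def powerset(X):
    """All subsets of X, as frozensets."""
    xs = list(X)
    return [frozenset(c) for r in range(len(xs) + 1) for c in combinations(xs, r)]

def missed_set(X, g):
    """The diagonal set {x in X : x not in g(x)}, which g cannot hit."""
    return frozenset(x for x in X if x not in g[x])

X = [0, 1, 2]
subsets = powerset(X)

# Try every one of the 8^3 maps g : X -> powerset(X).
all_missed = True
for images in product(subsets, repeat=len(X)):
    g = dict(zip(X, images))
    if missed_set(X, g) in images:
        all_missed = False
print(all_missed)
```

The reason the check succeeds is the same as in the general argument: if the diagonal set were equal to \(g(x)\) for some \(x\), then \(x\) would belong to it exactly when it does not.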

In the famous Russell’s Paradox, we consider the set of all sets that do not contain themselves. That is, we consider \[D = \{\text{set } S : S \not \in S\}.\] Then we ask whether \(D\) is in \(D\) or not. If \(D \in D\), then by the definition of \(D\), \(D \not \in D\). And if \(D \not \in D\), again by the definition of \(D\), \(D \in D\). Either way we get a contradiction. Therefore, the conclusion is that such a set \(D\) should not be mathematically definable.

This paradox is also an application of diagonalization. For any set \(X\), we cannot diagonalize against the set of all functions \(f : X \to \{0,1\}\). If we imagine diagonalizing against this set, where \(X\) is the set of all sets, the natural diagonal element \(f_D\) that comes out of diagonalization is precisely the characteristic function of the set \(D\) defined above.

For any infinite set \(S\), \(\wp(S)\) is uncountable. In particular, \(\wp(\mathbb N)\) is uncountable.

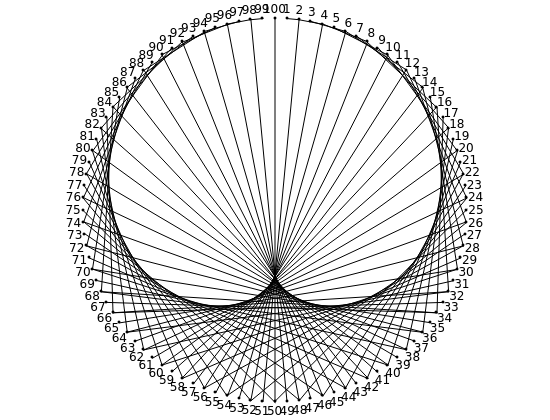

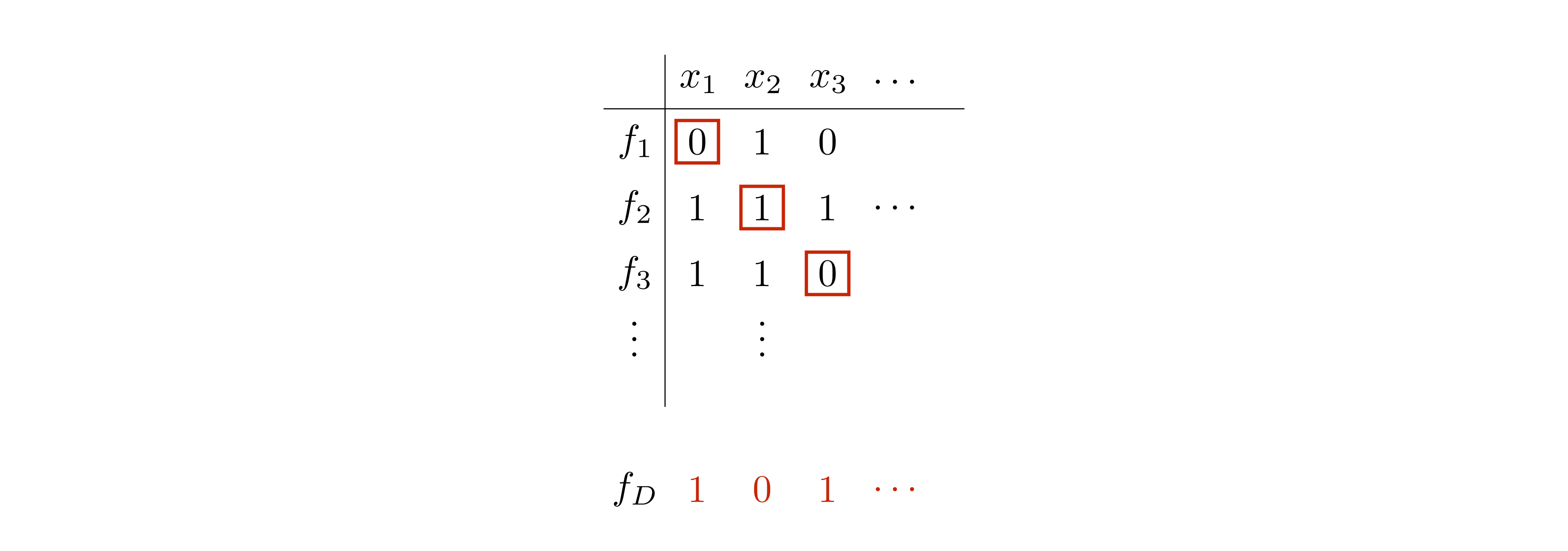

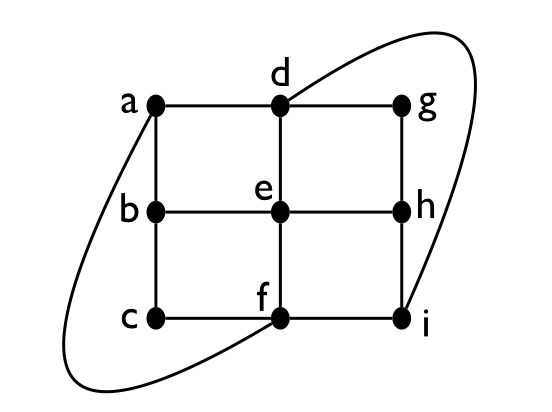

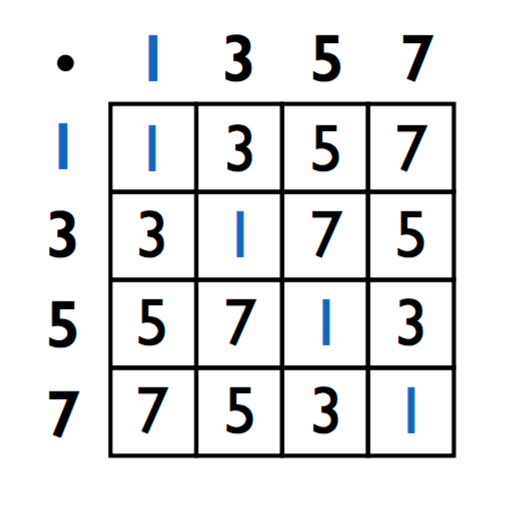

In a common scenario where diagonalization is applied, both \(\mathcal{F}\) and \(X\) are countably infinite sets. So we can list the elements of \(\mathcal{F}\) as \[f_1,\; f_2,\; f_3,\; \ldots\] as well as the elements of \(X\) as \[x_1,\; x_2,\; x_3,\; \ldots\] Then for all \(i\), define \(f_D(x_i)\) such that \(f_D(x_i) \neq f_i(x_i)\). If \(Y = \{0,1\}\), for example, \(f_D(x_i) = \textbf{not} \; f_i(x_i)\). The construction of \(f_D\) can be nicely visualized with a table, as shown below. Here, an entry corresponding to row \(f_i\) and column \(x_j\) contains the value \(f_i(x_j)\).

By construction, the diagonal element \(f_D\) differs from every \(f_i\), \(i \in \{1,2,3,\ldots\}\). In particular, it differs from \(f_i\) with respect to the input \(x_i\).
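The table construction above can be sketched on a finite corner of the table. This is only an illustration under the assumption \(Y = \{0,1\}\); the function name `diagonal` and the sample entries are made up for the example.

```python
def diagonal(table):
    """Given rows f_1..f_n restricted to x_1..x_n (entries in {0, 1}),
    return the first n values of f_D, where f_D(x_i) = not f_i(x_i)."""
    return [1 - table[i][i] for i in range(len(table))]

# Hypothetical 4x4 corner of the table: entry [i][j] is f_{i+1}(x_{j+1}).
table = [
    [0, 1, 0, 1],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [1, 0, 1, 1],
]
f_D = diagonal(table)

# f_D differs from row i at column i, so it equals no row of the table.
for i, row in enumerate(table):
    assert f_D[i] != row[i]
```

The check in the final loop succeeds for any choice of table entries, because only the diagonal entries are consulted and each one is flipped.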

There exists an irrational real number, that is, there exists a number in \(\mathbb R\setminus \mathbb Q\).

Prove that \(\mathbb R\) is uncountable.

Let \(\Sigma\) be some finite alphabet. We denote by \(\Sigma^\infty\) the set of all infinite-length words over the alphabet \(\Sigma\).

The set \(\{{\texttt{0}},{\texttt{1}}\}^\infty\) is uncountable.

Prove that if \(X\) is uncountable and \(X \subseteq Y\), then \(Y\) is also uncountable.

If we want to show that a set \(X\) is uncountable, it suffices to show that \(|X| \geq |Y|\) for some uncountable set \(Y\). Typically, a good choice for such a \(Y\) is \(\{{\texttt{0}},{\texttt{1}}\}^\infty\). And one strategy for establishing \(|X| \geq |\{{\texttt{0}},{\texttt{1}}\}^\infty|\) is to identify a subset \(S\) of \(X\) such that \(S \leftrightarrow\{{\texttt{0}},{\texttt{1}}\}^\infty\).

Show that the following sets are uncountable.

The set of all bijective functions from \(\mathbb N\) to \(\mathbb N\).

\(\{x_1x_2 x_3 \ldots \in \{{\texttt{1}},{\texttt{2}}\}^\infty : \text{ for all $n \geq 1$, $\; \sum_{i=1}^n x_i \not\equiv 0 \pmod 4$}\}\)

For sets \(X, Y\), what are the definitions of \(|X| \leq |Y|\), \(|X| \geq |Y|\), \(|X| = |Y|\), and \(|X| < |Y|\)?

What is the definition of a countable set?

What is the CS method for showing that a set is countable?

True or false: There exists an infinite set \(S\) such that \(|S| < |\mathbb N|\).

True or false: \(\{{\texttt{0}},{\texttt{1}}\}^* \cap \{{\texttt{0}},{\texttt{1}}\}^\infty = \varnothing\).

True or false: \(|\{{\texttt{0}},{\texttt{1}},{\texttt{2}}\}^*| = |\mathbb Q\times \mathbb Q|\).

State the Diagonalization Lemma and specify how the diagonal function \(f_D : X \to Y\) is constructed (the construction should make sense for any \(X\) so do not assume \(X\) is finite or countably infinite).

What is the connection between the Diagonalization Lemma and uncountability?

What is Cantor’s Theorem?

True or false: \(|\wp(\{{\texttt{0}},{\texttt{1}}\}^\infty)| = |\wp(\wp(\{{\texttt{0}},{\texttt{1}}\}^\infty))|\).

True or false: There is a surjection from \(\{{\texttt{0}},{\texttt{1}}\}^\infty\) to \(\{{\texttt{0}},{\texttt{1}},{\texttt{2}},{\texttt{3}}\}^\infty\).

True or false: Let \(\Sigma\) be an alphabet. The set of encodings mapping \(\mathbb N\) to \(\Sigma^*\) is countable.

True or false: Let \(\Sigma = \{{\texttt{1}}\}\) be a unary alphabet. The set of all languages over \(\Sigma\) is countable.

State a technique for proving that a given set is uncountable.

Here are the important things to keep in mind from this chapter.

The definitions of injective, surjective and bijective functions are fundamental.

The concepts of countable and uncountable sets, and their precise mathematical definitions.

When it comes to showing that a set is countable, the CS method is the best choice, almost always.

The Diagonalization Lemma is one of the most important and powerful techniques in all of mathematics.

When showing that a set is uncountable, establishing an injection from \(\{{\texttt{0}},{\texttt{1}}\}^\infty\) (or a surjection to \(\{{\texttt{0}},{\texttt{1}}\}^\infty\)) is one of the best techniques you can use.

Fix some input alphabet and tape alphabet. The set of all Turing machines \(\mathcal{T}= \{M : M \text{ is a TM}\}\) is countable.

Fix some alphabet \(\Sigma\). There are languages \(L \subseteq \Sigma^*\) that are not semi-decidable (and therefore undecidable as well).

In the previous chapter, we presented diagonalization with respect to a set of functions. However, Lemma (Diagonalization) can be applied in a slightly more general setting: it can be applied when \(\mathcal{F}\) is a set of objects that map elements of \(X\) to elements of \(Y\) (e.g. \(\mathcal{F}\) can be a set of Turing machines which map elements of \(\Sigma^*\) to elements of \(\{0,1,\infty\}\)). In other words, elements of \(\mathcal{F}\) do not have to be functions as long as they are “function-like”. Once diagonalization is applied, one gets an explicit function \(f_D : X \to Y\) such that no object in \(\mathcal{F}\) matches the input/output behavior of \(f_D\). We will illustrate this in the proof of the next theorem.

We say that a TM \(M\) self-accepts if \(M(\left \langle M \right\rangle) = 1\). The self-accepts problem is defined as the decision problem corresponding to the language \[\text{SA}_{\text{TM}}= \text{SELF-ACCEPTS}_{\text{TM}}= \{\left \langle M \right\rangle : \text{$M$ is a TM which self-accepts} \}.\] The complement problem corresponds to the language \[\overline{\text{SA}_{\text{TM}}}= \overline{\text{SELF-ACCEPTS}_{\text{TM}}}= \{\left \langle M \right\rangle : \text{$M$ is a TM which does not self-accept}\}.\] Note that if a TM \(M\) does not self-accept, then \(M(\left \langle M \right\rangle) \in \{0, \infty\}\).

The language \(\overline{\text{SA}_{\text{TM}}}\) is undecidable.

Note that the proof of the above theorem establishes something stronger: the language \(\overline{\text{SA}_{\text{TM}}}\) is not semi-decidable. To see this, recall that if \(M\) is a semi-decider for \(f_D\), by definition, this implies that for all \(x \in \Sigma^*\):

if \(f_D(x) = 1\), then \(M(x) = 1\),

if \(f_D(x) = 0\), then \(M(x) \in \{0, \infty\}\).

However, by construction of \(f_D\), we know that for input \(x = \left \langle M \right\rangle\), if \(f_D(\left \langle M \right\rangle) = 1\) then \(M(\left \langle M \right\rangle) \in \{0, \infty\}\), and if \(f_D(\left \langle M \right\rangle) = 0\), then \(M(\left \langle M \right\rangle) = 1\). So for all TMs \(M\), \(M\) fails to semi-decide \(f_D\) on input \(x = \left \langle M \right\rangle\).
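The case analysis above is small enough to check exhaustively. The following Python sketch does so in a toy model: the marker `INF` and the function names are assumptions for illustration, and `f_D` here takes the value \(M(\left \langle M \right\rangle)\) as its argument rather than the encoding itself.

```python
INF = "inf"  # stands for "loops forever" in this toy model

def f_D(self_output):
    """self_output is the value M(<M>) in {0, 1, INF}.
    Return f_D(<M>): 1 exactly when M does not self-accept."""
    return 0 if self_output == 1 else 1

def consistent_with_semi_decider(self_output):
    """Judging only by input <M>, could M be a semi-decider for f_D?
    A semi-decider must output 1 when f_D is 1, and 0 or INF when f_D is 0."""
    target = f_D(self_output)
    if target == 1:
        return self_output == 1
    return self_output in (0, INF)

# Whatever M does on its own encoding, it fails on that input.
results = {out: consistent_with_semi_decider(out) for out in (0, 1, INF)}
```

All three entries of `results` are `False`, matching the claim that no TM semi-decides \(f_D\).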

The language \(\text{SA}_{\text{TM}}\) is undecidable.

The general principle that the above theorem highlights is that since decidable languages are closed under complementation, if \(L\) is an undecidable language, then \(\overline{L} = \Sigma^* \setminus L\) must also be undecidable.

We define the following languages: \[\begin{aligned} \text{ACCEPTS}_{\text{TM}}& = \{\left \langle M,x \right\rangle : \text{$M$ is a TM which accepts input $x$}\}, \\ \text{HALTS}_{\text{TM}}& = \{\left \langle M,x \right\rangle : \text{$M$ is a TM which halts on input $x$} \}. \end{aligned}\]

The language \(\text{ACCEPTS}_{\text{TM}}\) is undecidable.

The language \(\text{HALTS}_{\text{TM}}\) is undecidable.

The languages \(\text{SA}_{\text{TM}}\), \(\text{ACCEPTS}_{\text{TM}}\), and \(\text{HALTS}_{\text{TM}}\) are all semi-decidable, i.e., they are all in \(\mathsf{RE}\).

\(\mathsf{R}\neq \mathsf{RE}\).

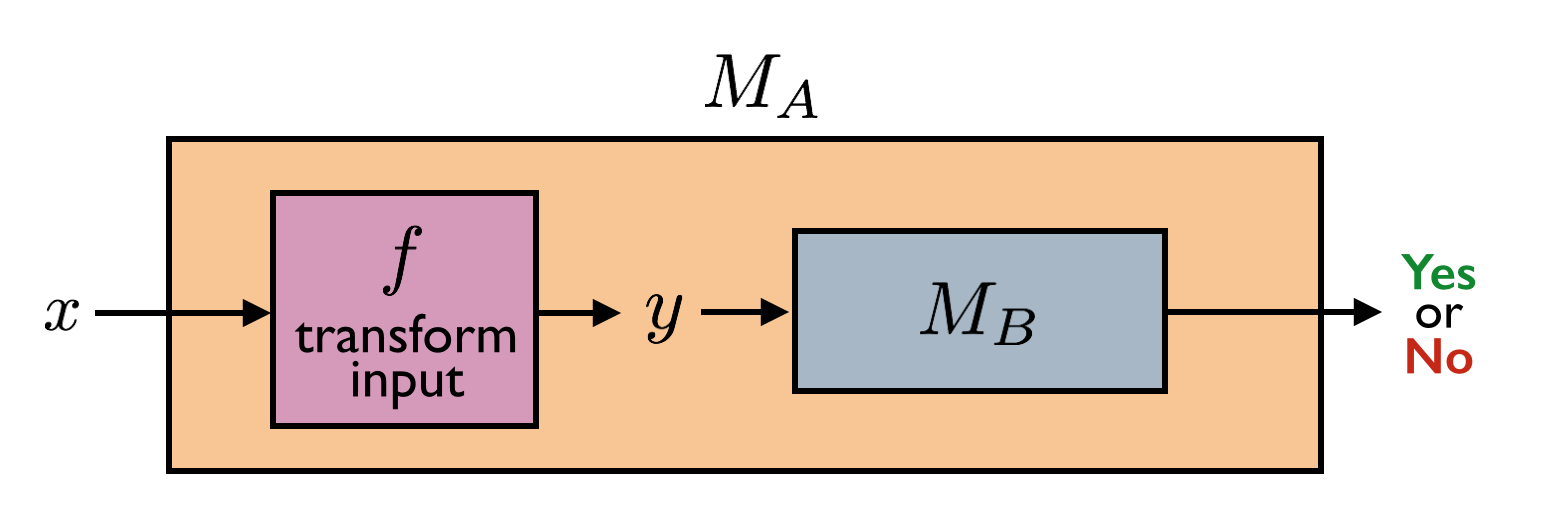

In the last section, we used the same technique in all the proofs. It will be convenient to abstract away this technique and give it a name. Fix some alphabet \(\Sigma\). Let \(L\) and \(K\) be two languages. We say that \(L\) reduces to \(K\), written \(L \leq K\), if we are able to do the following: assume \(K\) is decidable (for the sake of argument), and then show that \(L\) is decidable by using the decider for \(K\) as a black-box subroutine (i.e. a helper function). Here the languages \(L\) and \(K\) may or may not be decidable to begin with. But observe that if \(L \leq K\) and \(K\) is decidable, then \(L\) is also decidable. In other words, if \(L \leq K\) and \(K \in \mathsf{R}\), then \(L \in \mathsf{R}\). Equivalently, taking the contrapositive, if \(L \leq K\) and \(L\) is undecidable, then \(K\) is also undecidable. So when \(L \leq K\), we think of \(K\) as being at least as hard as \(L\) with respect to decidability (or that \(L\) is no harder than \(K\)), which justifies using the less-than-or-equal-to sign.

Even though in the diagram above we have drawn one instance of \(M_K\) being called within \(M_L\), \(M_L\) is allowed to call \(M_K\) multiple times with different inputs.
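The black-box structure of a reduction, including multiple calls to \(M_K\), can be sketched in Python. The two languages below are toy, decidable languages chosen only so that the code can run; they and the names `make_M_L`, `M_K`, `M_L` are assumptions for illustration and do not appear in the text.

```python
def make_M_L(M_K):
    """Build a decider for L from any decider M_K for K, used as a black box.
    Toy languages (assumptions for illustration only):
      K = {w in {0,1}* : w has an even number of 1s}
      L = {(a, b) : a and b have the same parity of 1s}"""
    def M_L(a, b):
        # Two separate calls to the subroutine, on different inputs.
        return 1 if M_K(a) == M_K(b) else 0
    return M_L

def M_K(w):
    """A decider for K: accept iff w contains an even number of 1s."""
    return 1 if w.count("1") % 2 == 0 else 0

M_L = make_M_L(M_K)
print(M_L("11", "0"), M_L("1", "0"))
```

Note that `make_M_L` never looks inside `M_K`; it only uses its outputs. That is the sense in which the decider for \(K\) is a black box.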

In the literature, the above idea is formalized using the notion of a Turing reduction (with the corresponding symbol \(\leq_T\)). In order to define it formally, we need to define Turing machines that have access to an oracle. See Section (Bonus: Oracle Turing Machines) for details.

Note the difference between the notations \(|L| \leq |K|\) and \(L \leq K\). These have very different meanings. The notation \(L \leq K\) only applies to languages and denotes a reduction. The notation \(|L| \leq |K|\) applies to any two sets \(L\) and \(K\) and denotes the existence of an injective function from the set \(L\) to the set \(K\).

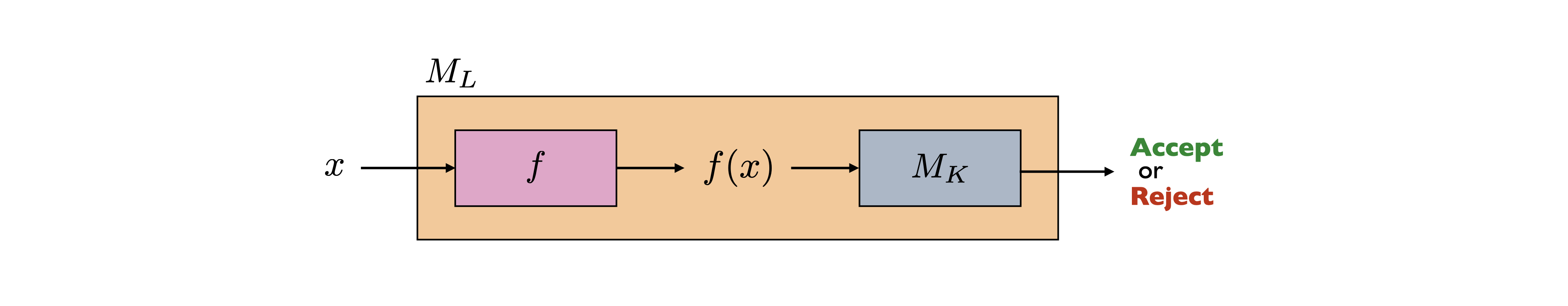

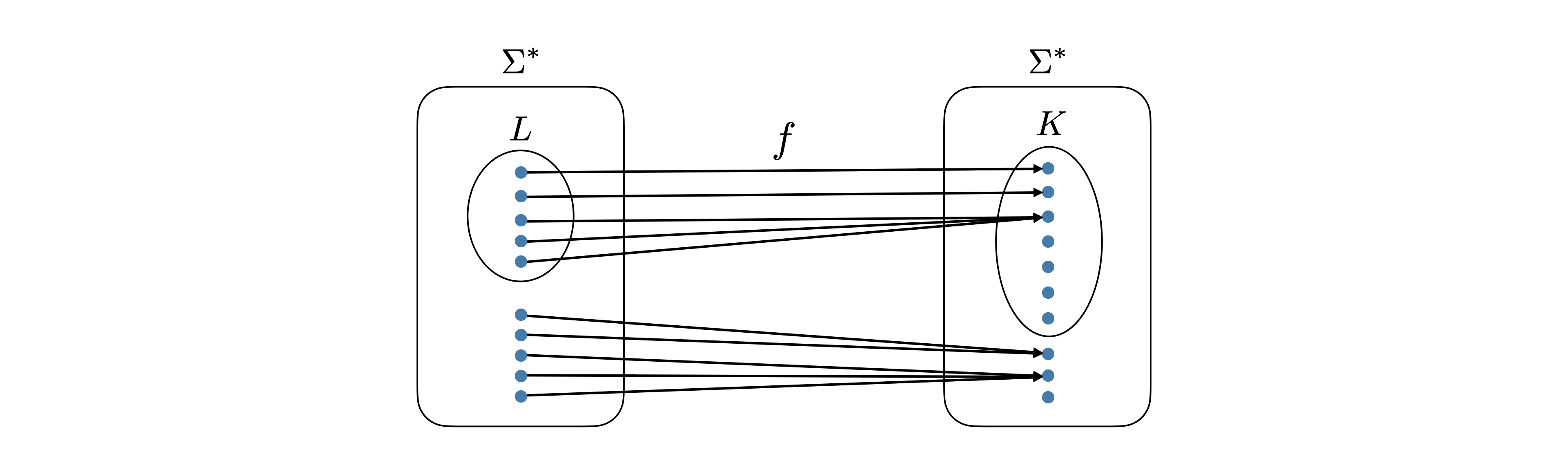

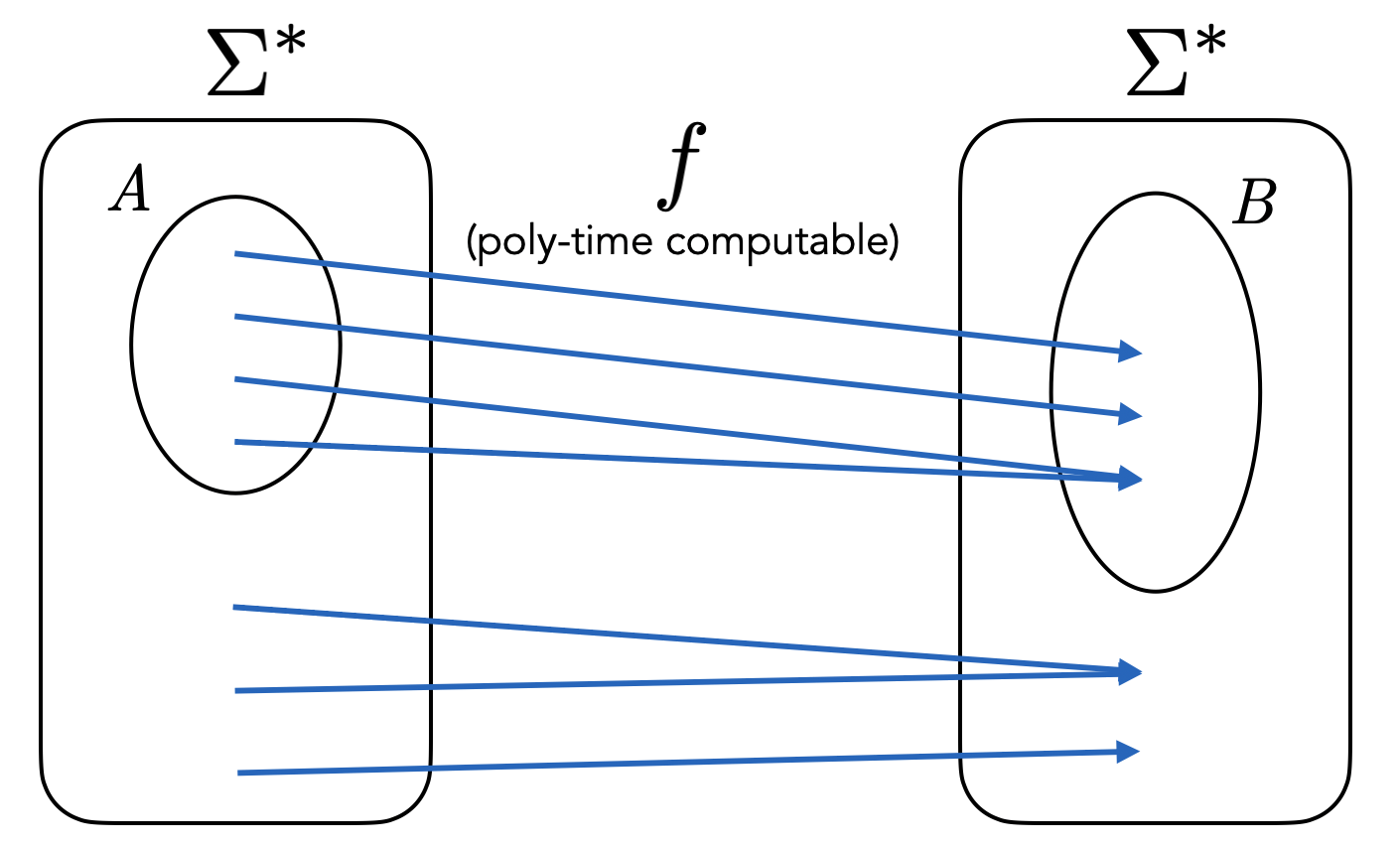

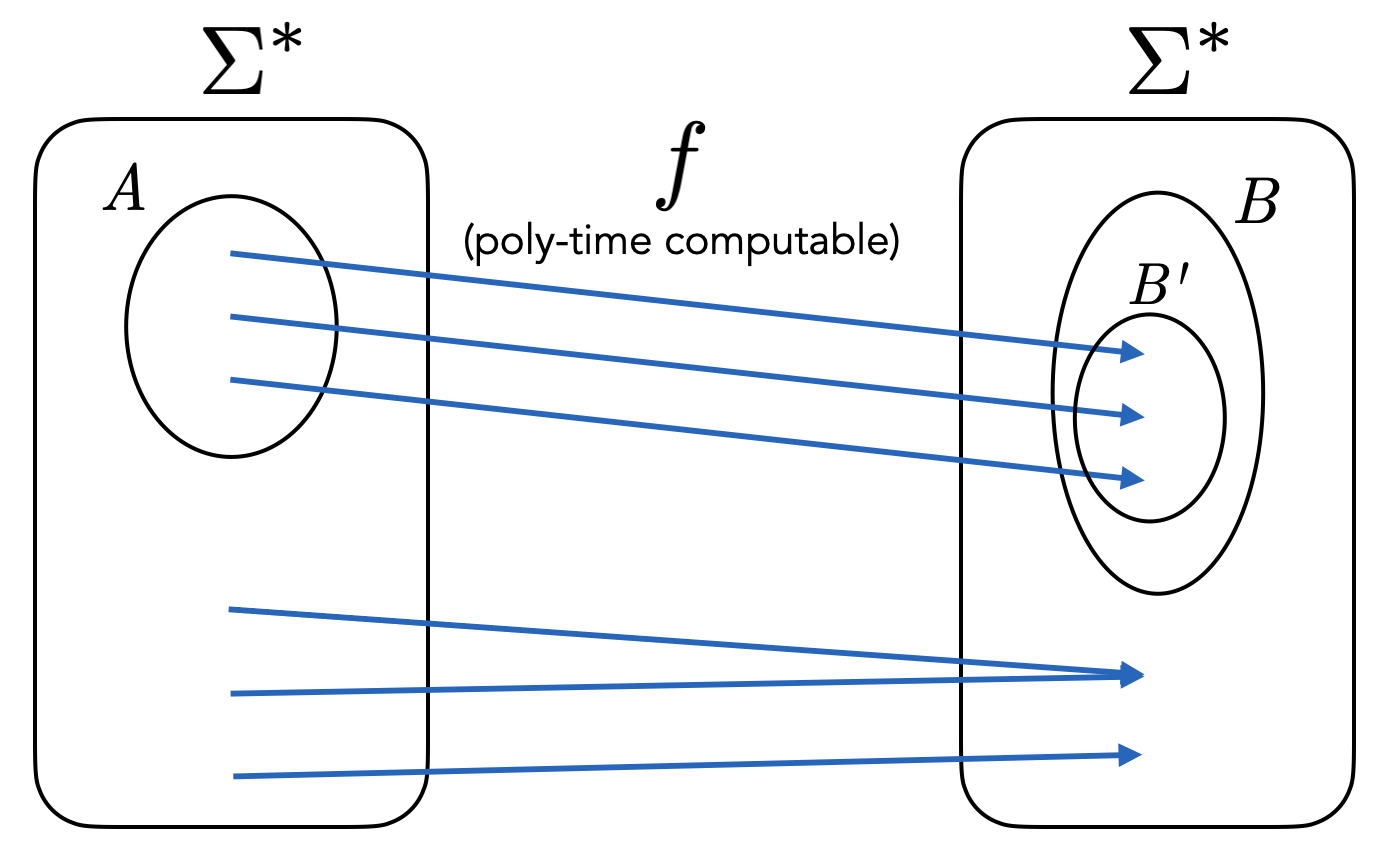

Many reductions \(L \leq K\) have the following very special structure. The TM \(M_L\) is such that on input \(x\), it first transforms \(x\) into a new string \(y = f(x)\) by applying some computable transformation \(f\). Then \(y\) is fed into \(M_K\), and the output of \(M_L\) is the same as the output of \(M_K\). In other words, \(M_L(x) = M_K(f(x))\).

These special kinds of reductions are called mapping reductions (or many-one reductions), with the corresponding notation \(L \leq_m K\). Note that the reduction we have seen from \(\text{SA}_{\text{TM}}\) to \(\text{ACCEPTS}_{\text{TM}}\) is a mapping reduction. However, the reduction from \(\overline{\text{SA}_{\text{TM}}}\) to \(\text{SA}_{\text{TM}}\) is not a mapping reduction because the output of \(M_K\) is flipped, or in other words, we have \(M_L(x) = \textbf{not } M_K(f(x))\).

Almost all the reductions in this section will be mapping reductions.

Note that to specify a mapping reduction from \(L\) to \(K\), all one needs to do is specify a TM \(M_f\) that computes \(f : \Sigma^* \to \Sigma^*\) with the property that for any \(x \in \Sigma^*\), \(x \in L\) if and only if \(f(x) \in K\).
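As a rough illustration of the shape \(M_L(x) = M_K(f(x))\), here is a Python sketch of the standard map \(\left \langle M \right\rangle \mapsto \left \langle M, \left \langle M \right\rangle \right\rangle\) from \(\text{SA}_{\text{TM}}\) to \(\text{ACCEPTS}_{\text{TM}}\). Since the real languages are undecidable, the sketch works in a toy universe: every "machine" is a total Python predicate and its encoding is just its name. The dictionary `MACHINES` and all names in it are hypothetical.

```python
# Toy universe (an assumption for illustration): each "machine" is a total
# Python predicate on strings, and its encoding <M> is simply its name.
MACHINES = {
    "accept_all": lambda x: True,
    "reject_all": lambda x: False,
    "long_input": lambda x: len(x) > 8,
}

def M_K(pair):
    """Toy decider for ACCEPTS: does the machine called `name` accept x?"""
    name, x = pair
    return 1 if MACHINES[name](x) else 0

def f(name):
    """The computable map <M> -> <M, <M>>."""
    return (name, name)

def M_L(name):
    """Decider for toy SELF-ACCEPTS, obtained as M_L(x) = M_K(f(x))."""
    return M_K(f(name))

print([M_L(name) for name in MACHINES])
```

The only work specific to the reduction is the function `f`; the output of `M_K` is passed through unchanged, which is what distinguishes a mapping reduction from a general one.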

A TM \(M\) is satisfiable if there is some input string that \(M\) accepts. In other words, \(M\) is satisfiable if \(L(M) \neq \varnothing\). We now define the following languages: \[\begin{aligned} \text{SAT}_{\text{TM}}& = \{\left \langle M \right\rangle : \text{$M$ is a satisfiable TM}\}, \\ \text{NEQ}_{\text{TM}}& = \{\left \langle M_1,M_2 \right\rangle : \text{$M_1$ and $M_2$ are TMs with $L(M_1) \neq L(M_2)$}\}, \\ \text{HALTS-EMPTY}_{\text{TM}}& = \{\left \langle M \right\rangle : \text{$M$ is a TM such that $M(\epsilon)$ halts}\}, \\ \text{FINITE}_{\text{TM}}& = \{\left \langle M \right\rangle : \text{$M$ is a TM such that $L(M)$ is finite}\}.\end{aligned}\]

The language \(\text{SAT}_{\text{TM}}\) is undecidable.

The language \(\text{NEQ}_{\text{TM}}\) is undecidable.

Show that the following languages are undecidable.

\(\text{HALTS-EMPTY}_{\text{TM}}= \{\left \langle M \right\rangle : \text{ $M$ is a TM and $M(\epsilon)$ halts}\}\)

\(\text{FINITE}_{\text{TM}}= \{\left \langle M \right\rangle : \text{$M$ is a TM that accepts finitely many strings}\}\)

\(\text{SAT}_{\text{TM}}\leq \text{HALTS}_{\text{TM}}\).

Describe a TM that semi-decides \(\text{SAT}_{\text{TM}}\). Proof of correctness is not required.

Let \(L, K \subseteq \{{\texttt{0}},{\texttt{1}}\}^*\) be languages. Prove or disprove the following claims.

If \(L \leq K\) then \(K \leq L\).

If \(L \leq K\) and \(K\) is regular, then \(L\) is regular.

In all the reductions \(L \leq K\) presented in this chapter, the TM \(M_L\) calls the TM \(M_K\) exactly once. (And in fact, almost all the reductions in this chapter are mapping reductions). Present a reduction from \(\text{HALTS}_{\text{TM}}\) to \(\text{ACCEPTS}_{\text{TM}}\) in which \(M_\text{HALTS}\) calls \(M_\text{ACCEPTS}\) twice, and both calls/outputs are used in a meaningful way.

If a language \(L\) is semi-decidable but undecidable, then \(\overline{L}\) is not semi-decidable. In other words, if \(L \in \mathsf{RE}\setminus \mathsf{R}\), then \(\overline{L} \not \in \mathsf{RE}\).
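One standard way to see why this holds: if both \(L\) and \(\overline{L}\) were semi-decidable, alternating single steps of the two semi-deciders would give a decider for \(L\), contradicting undecidability. The Python sketch below illustrates the alternation under a modeling assumption: each semi-decider is a generator that yields `False` while still running and `True` when it accepts. The toy language (strings containing a `1`) and all function names are hypothetical.

```python
def decide_by_alternation(semi_L, semi_coL, x):
    """Alternate single steps of two semi-deciders on x and answer as soon
    as one of them accepts. Exactly one of them accepts eventually,
    because x is either in L or in its complement."""
    run_L, run_coL = semi_L(x), semi_coL(x)
    while True:
        if next(run_L, False):      # one step of the semi-decider for L
            return 1
        if next(run_coL, False):    # one step of the semi-decider for co-L
            return 0

def semi_L(x):
    """Toy semi-decider: accepts strings containing a 1, else runs forever."""
    for ch in x:
        if ch == "1":
            yield True
            return
        yield False
    while True:                     # models looping forever
        yield False

def semi_coL(x):
    """Toy semi-decider for the complement: accepts strings with no 1."""
    for ch in x:
        if ch == "1":
            while True:             # models looping forever
                yield False
        yield False
    yield True

print(decide_by_alternation(semi_L, semi_coL, "0010"))
```

Running the two machines one after the other would not work, since the first might never halt; taking turns step by step is what guarantees termination.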

The languages \(\overline{\text{SA}_{\text{TM}}}\), \(\overline{\text{ACCEPTS}_{\text{TM}}}\), and \(\overline{\text{HALTS}_{\text{TM}}}\) are not semi-decidable, i.e. they are not in \(\mathsf{RE}\).

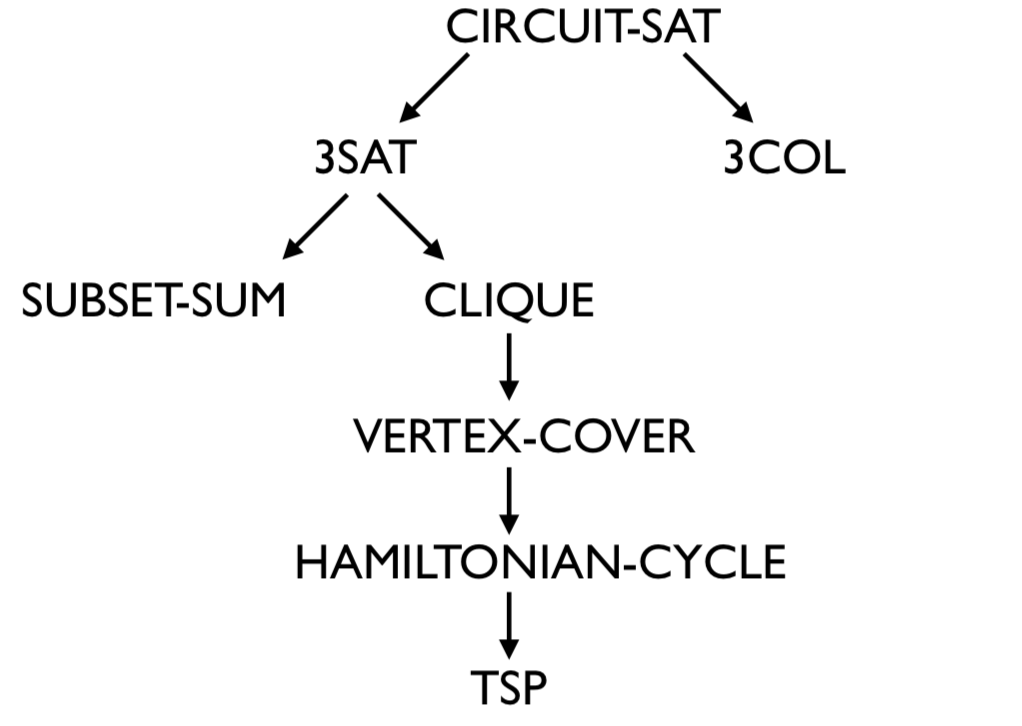

The relationship among the complexity classes \(\mathsf{REG}\), \(\mathsf{R}\), and \(\mathsf{RE}\) can be summarized as follows.

\(\mathsf{REG}\subsetneq \mathsf{R}\) since \(\{{\texttt{0}}^n{\texttt{1}}^n : n \in \mathbb N\}\) is not regular but is decidable.

There are languages like \(\text{SA}_{\text{TM}}\), \(\text{ACCEPTS}_{\text{TM}}\), \(\text{HALTS}_{\text{TM}}\), \(\text{SAT}_{\text{TM}}\) that are undecidable (not in \(\mathsf{R}\)), but are semi-decidable (in \(\mathsf{RE}\)).

The complements of the languages above are not in \(\mathsf{RE}\).

Given some decision problem \(g : \Sigma^* \to \{0,1\}\), we define a \(g\)-oracle Turing machine (or simply \(g\)-OTM, or just OTM if \(g\) is understood from the context) as a Turing machine with an additional oracle instruction which can be used in the TM’s high-level description. The additional instruction has the following form. For any string \(x\) and any variable name \(y\) of our choosing, we are allowed to use \[y = g(x).\] After this instruction, the variable \(y\) holds the value \(g(x)\) and can be used in the rest of the Turing machine’s description. In this context, the function \(g\) is called an oracle.

We’ll use a superscript of \(g\) (e.g. \(M^g\)) to indicate that a machine is a \(g\)-OTM.

Similar to TMs, we write \(M^g(x) = 1\) if the oracle TM \(M^g\), on input \(x\), accepts. We write \(M^g(x) = 0\) if it rejects. And we write \(M^g(x) = \infty\) if it loops forever.

If \(f : \Sigma^* \to \{0,1\}\) is a decision problem such that for all \(x \in \Sigma^*\), \(M^g(x) = f(x)\), then we say \(M^g\) describes \(f\) (and the language corresponding to \(f\)).
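An oracle machine can be pictured as an ordinary procedure that receives the oracle as a parameter. The following Python sketch is only a toy model: the oracle `g` below is a hypothetical choice that happens to be computable so that the code runs, whereas in the definition above \(g\) may be any decision problem.

```python
def M(x, g):
    """A g-OTM in this toy model: an ordinary procedure that may execute
    the oracle instruction y = g(x) and then use the value of y."""
    y = g(x)                    # oracle instruction
    return 0 if y == 1 else 1   # accept exactly when the oracle says 0

def g(x):
    """Hypothetical oracle: 1 iff x has an even number of 1s."""
    return 1 if x.count("1") % 2 == 0 else 0

print(M("11", g), M("1", g))
```

In the notation above, with this \(g\), the machine \(M^g\) agrees on every input with the decision problem \(f\) that outputs \(1\) exactly when \(x\) has an odd number of \({\texttt{1}}\)s.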